Prof. Geoffrey C. Fox

Director of the Northeast Parallel Architectures Center

Professor of Computer Science and Physics

111 College Place

Syracuse, New York 13244-4100, USA

Tel.: 315-443-2163, Fax: 315-443-4741

David Warner MD

Nason Fellow and Director of Institute for Interventional Informatics

111 College Place

Syracuse, New York 13244-4100, USA

Tel.: 315-443-2163, Fax: 315-443-1973

http://www.pulsar.org Email: davew@npac.syr.edu

Administrative point of contact:

Matthew E. Clark

Office of Sponsored Programs

113 Bowne Hall

Syracuse NY 13244-4100

Tel: 315-443-9356, Fax: 315-443-9361

Email: clarkme@syr.edu

A: Innovative claims

We introduce an Intermental Network as the UltraScale computer formed by the linkage of human minds coherently and synchronously to tackle a single problem together. This network can be used on its own or as part of a distributed system with other UltraScale computers. Essential to an Intermental net is a reversal of roles where humans are not users of computers but rather their linked minds constitute the parallel computer whose function is enhanced by interaction with conventional systems. Further, unlike a shared virtual environment, we are not trying to modulate an individual's perceptual state by an accurate representation of the physical world and the impact of users on it. Rather our main aim is more effective modulation of the physical environment from the actions of individuals cognizant of an accurate synthesis of the perceptual state of the linked minds.

We will design a system architecture and build a prototype system in which individual bodies are linked to a net through a rich sensory interface with over 30 channels. These signals will be filtered and presented as additional perceptual dimensions to the participating minds, which will perceive either the states of individuals or their synthesis as one or more system-wide group perceptual states. This integration will use novel collaboration software, TangoInteractive, and will be built using Web technologies, which ensure extensibility and ease of integration of new subsystems. We will stress performance, flexibility, scaling and fault tolerance both in our Intermental architecture and in the explicit enhancements to TangoInteractive used in our prototype implementation. The human-computer interface is an adaptation of the wearable computer system under development for the DARPA Bot-Masters project. The interface will be flexibly linked to the net with NeatTools, a visual, network-based software system that supports general filters.

We have identified three application areas that will drive requirements for our system architecture and are the basis of our prototype implementation. These areas are Special Operations, Battlefield Medical Intelligence and Crisis Management. Each of these offers tactical opportunities to use the linked minds to aid split-second decisions. Further, the Intermental net will continually modulate the perceptual state of individual decision-makers as time progresses, and in this way we support longer-term strategic judgements.

Our base proposal, at $300K per year for two years, delivers a system architecture and a prototype implementation. Options in years 2 and 3 allow integration with realistic distributed computer networks and significant application demonstrations.

The proposal team brings a very strong and perhaps unrivalled integration of talents to this interdisciplinary project. Fox is a pioneer in parallel computing and an expert in the general theory of complex systems. Podgorny and Fox have built several large Web-based software systems, including the sophisticated collaboration system TangoInteractive that is the core of the Intermental net's integration. Warner and Lipson have pioneered cost-effective, innovative human-computer interfaces and designed and built the NeatTools software that allows them to rapidly prototype new approaches. We have expertise in the key motivating applications and will add the necessary collaborators in conventional metacomputing if the options are exercised.

Section B: Technical Rationale, Approach and Plan

New computers, communication and peripheral devices from biological, quantum, superconducting and other technologies promise UltraScale computing. One of the most obvious, but still interesting, biological computers is Nature's premier computer: the human mind. There is the potential for a world-wide (and space-wide) linkage of billions of such human minds with large numbers of constructed devices (traditional computers), forming an Intermental network. We do not envisage this as a glorified next-generation World Wide Web, since that implies that each client (human mind) links essentially independently to a single server in a given transaction, so that the world's wisdom is obtained as the incoherent sum of individual contributions. Rather, as in a parallel computer, our Intermental computer will link entities coherently and synchronously to tackle a single problem. Further, as described below, our concept is a major extension of the interesting and still-developing shared immersive virtual environments. In the latter, one represents the world classically, through the actions of other people on it. In contrast, an Intermental net directly represents other participants through a rendering of their perceptual state.

An Intermental network reverses the traditional role of the human as the user of the computer system. Rather than the human as the usually asynchronous viewer of the computer's possibly parallel computations, we consider the conventional computer network as an aid to the parallel synchronous interactive Intermental network of linked minds. In another reversal, we are not trying to modulate an individual's perceptual state by an accurate representation of the physical world; rather our main aim is more effective modulation of the physical environment through an accurate synthesis of the perceptual state of the linked minds.

We use the term "mind" to mean "consciously experienced perceptual state-space". Thus the "linked minds" of the Intermental network refers to the capability of the network to modulate a coherent (phase-consistent) co-perceptualization across a number of individual "minds" for the purpose of synthesizing a collective intelligence, which will influence future iterations of a computational process. This Intermental linkage will allow individual users to perceive the collective response dynamics of other minds while these minds reach states based on knowledge of the actions of the whole. Our multi-modal perception and expression systems (wearable computers), which are a critical part of this proposal, are designed to enhance and optimize the inevitably imperfect representation of each mind's state as it is transmitted through digital filters and networks and seen by others in the Intermental net. This gives each mind additional perceptual dimensions corresponding to either the state of individual minds or the Intermental net's synthesis of a group perceptual state. The result is a form of quasi-self-awareness, in which the computational properties of individual units are influenced by the state of the whole system. We believe that this experience of co-perceptual processes will lead to the emergence of new computational capacity not possible with today's asynchronous networks linking incoherent (sequential) minds.
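As a minimal illustration of these "additional perceptual dimensions", the sketch below assumes that each mind's filtered state is a short vector of normalized channel values and that the group perceptual state is simply their element-wise mean. Each participant is then presented with its own state, the other participants' states, and the group synthesis. The function names, the channel labels and the use of a plain mean are hypothetical choices for illustration, not the proposal's design.

```python
from statistics import fmean


def synthesize_group_state(states):
    """Hypothetical synthesis rule: element-wise mean of all state vectors."""
    if not states:
        raise ValueError("need at least one participant")
    width = len(next(iter(states.values())))
    return [fmean(vec[i] for vec in states.values()) for i in range(width)]


def perceptual_view(me, states):
    """Additional perceptual dimensions presented to participant `me`."""
    return {
        "self": states[me],
        "others": {name: vec for name, vec in states.items() if name != me},
        "group": synthesize_group_state(states),
    }


# Each mind's filtered state as two hypothetical channels, normalized to 0..1
states = {"a": [0.2, 0.8], "b": [0.4, 0.6], "c": [0.6, 0.4]}
view = perceptual_view("a", states)
```

A weighted mean, a median, or a learned fusion filter could replace the mean without changing the shape of `perceptual_view`.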

This Intermental network extends the familiar concept of a shared virtual environment where users respond to a changing system without direct knowledge of the thought processes (perceptual states) of other users. Rather than perceiving the other participants' perceptual states indirectly through their action on the physical environment, an Intermental network provides to each user direct awareness of the probable perceptual states of the other players at any moment.

B1.2 Motivating Applications

We have chosen three applications in which to focus our activities. In our basic proposal we will investigate the architecture of an Intermental net and produce a prototypical skeleton implementation. In this $300K per year effort for 2 years, we will not deliver any major demonstrations but rather working networks illustrating the basic principles. We have defined options in years 2 and 3 which would expand the effort and produce realistic demonstrations that could be based on our applications described below. However for our basic proposal, we will just use the applications to drive requirements for our architecture studies and our skeleton implementations.

Our three application areas are special operations environments, battlefield medical intelligence, and crisis management. Intermental nets have two types of application impact, tactical and strategic, and each of our chosen areas can be affected in both ways. Tactically, the Intermental net can enhance split-second decisions by providing instantaneous awareness of the group perceptual state. Integrated over time, this lets members of the Intermental net remain continually aware of the evolving perceptual state of others addressing the same problem, which can lead to better strategic decisions.
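One simple way to picture the tactical/strategic distinction is sketched below. It assumes the group perceptual state is already available as a vector at each time step. The tactical view is the latest vector, and the strategic view is a running integral of it, computed here as an exponential moving average. The `GroupStateTracker` name, the `alpha` smoothing factor and the choice of an exponential moving average are illustrative assumptions, not part of the proposal.

```python
class GroupStateTracker:
    """Hypothetical tracker of instantaneous (tactical) and time-integrated
    (strategic) group perceptual state, using an exponential moving average."""

    def __init__(self, alpha=0.1):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.instantaneous = None
        self.integrated = None

    def update(self, group_state):
        self.instantaneous = list(group_state)
        if self.integrated is None:
            self.integrated = list(group_state)  # first sample seeds the average
        else:
            self.integrated = [
                (1.0 - self.alpha) * old + self.alpha * new
                for old, new in zip(self.integrated, group_state)
            ]
        return self.instantaneous, self.integrated


tracker = GroupStateTracker(alpha=0.5)
tracker.update([0.0])
tactical, strategic = tracker.update([1.0])
```

A small `alpha` weights long-term history heavily (a slowly changing strategic picture), while `alpha = 1` collapses the integrated view onto the instantaneous one.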

Special operations environments present a highly challenging scenario for gathering, processing and depicting time-critical information. Present-day operations are especially harrowing for military personnel because of the insidious, stealthy nature of modern threats. Presenting critical information to key personnel who are simultaneously enabled and empowered to respond effectively has long been a problem. Inadequate capacity to cope with various threats necessitates large allocations of resources and personnel just to ensure that higher-level priorities can go forward. This proposal responds proactively to this problem by specifying an "interventional informatic" methodology for comprehensively addressing the problem of connecting strategic personnel to emerging information and enabling their interaction with that information. For the DARPA distributed robotics project, we are currently developing a single, multimodally integrated interface system for the perceptibility and expressibility of massive quantities of critical information. What we propose here extends the focus beyond interfacing individual personnel by addressing the issues of allowing the Intermental network to control the robots.

We will research issues of providing the forward-deployed personnel with a linkage to the collective intelligence of command communication and other intelligence they need to be maximally effective. We will seek to develop the requirements of an Intermental network resource for augmenting the current efforts to instrument these key personnel with body-worn computers and communication tools.

It is also our intention to provide a powerful resource for battlefield medical intelligence where the traditional concept of telemedicine is augmented by an intelligent integrative system with distributed doctors and patients linked together coherently. As we describe later, traditional telemedicine has proved inadequate in many circumstances and the proposed Intermental system appears to offer a more promising approach to quality time-critical health care.

The final example is crisis management, which is quite similar to command and control and involves decision (judgment) support that intrinsically mixes people and computers in real-time, simultaneous interactions. One of our base technologies, the TangoInteractive collaboratory, was originally developed to demonstrate the value of commodity (Web) technologies in cost-effective, high-quality command and control applications. Tango has been chosen by the XII group to integrate a crisis management demonstration this May at Hanscom Air Force Base.

In general, these applications lead to important requirements for the Intermental network that apply to a wide range of mission-critical applications where access to the intelligence of a collective of co-aware minds is essential to optimal mission performance. We note that the P.I.s have substantial experience in the chosen application areas.

B1.3 Core Technologies

This proposal is built on three key technologies where our team has world class capabilities. They will be scaled up and blended in this effort. The core efforts are in human-computer interfaces (HCI), collaborative systems, and parallel/distributed computing. We briefly discuss these separately in the following.

The Intermental HCI will be led by medical neuroscientist David Warner, MD, and biophysicist Edward Lipson, Ph.D., and will aim at maximizing the sensory throughput and expressive capacity of the individuals linked to this Intermental network. This component of our proposal leverages a DARPA Bot-Masters project that is developing an advanced body-worn HCI aimed at controlling the behavior of an array of distributed robots. This system uses novel hardware interfaces and software built as an extension of NPAC's NeatTools system. NeatTools was developed to be adaptable both to the physiological and cognitive constraints of individual users and to unique applications.

Linking an individual to so much real-time information naturally requires optimizing both the amount and the level of understanding (quality of information) of the transferred data. We will build all our software systems on top of commodity Web technologies because, as we have argued (http://www.npac.syr.edu/users/gcf/HPcc/HPcc.html), this leverages the best available distributed information processing software.

A key Web-based subsystem, and our second major building block, is the collaboration capability that will support the synchronous sharing of information (in the form of distributed objects) among the participants in the Intermental network. Here we will build on NPAC's TangoInteractive system, designed and built by a team led by Marek Podgorny, which is not only a world-leading collaboration system but also already has substantial support for our chosen application areas. As mentioned above, Tango was originally built as a proof of concept that pervasive Web technologies could be used in command and control, and it is now part of the XII crisis management project led by Lois McCoy of the National Institute for Urban Search and Rescue. Tango therefore already has built-in capabilities for message processing, geographical information systems and other standard building blocks of command and control systems. Tango uses a powerful, flexible event-sharing collaboration model and allows either the transmission of raw messages or the definition of general system filters. As part of this proposal we will develop for Tango the new data fusion filters needed to aggregate data into the higher-level information processed by the HCI.

Our final technology area is distributed computing. Although this is very important, we have divided its use into two distinct phases. For the basic two-year study, we will need only the basic principles of interfacing parallel and distributed systems, of the type outlined in section B2.3. However, a major thrust in this area will be necessary for the significant demonstrations planned in the options of years 2 and 3. Here we will need new distributed computing techniques, which will be built on the Globus toolkit and led by Ian Foster of Argonne National Laboratory. Globus is the leading world-scale metacomputing activity, and the Globus team will also give us access to a large testbed for use in our demonstrations. Argonne and NPAC are already collaborating on the next-generation National Grid metacomputing project of NCSA (National Computational Science Alliance), and we will leverage all this activity. In particular, we will develop higher-level Web-based computing on top of the Globus facilities by extending NPAC's WebFlow system (Wojtek Furmanski). WebFlow is designed as a system integration tool but is built using pervasive technologies, an essential characteristic if we are to scale the Intermental network to very large sizes.

B1.4 Project Structure

Intermental systems, although present in many science fiction stories, are still largely unstudied, since the main computer science effort has been in "metacomputing", where one views humans as observers and users of large distributed networks of conventional computers. This proposal will therefore focus on system architecture issues: designing a network architecture that addresses the phenomenological aspects of integrating human mental function with networked computational systems. We will need to explore the physiological constraints on rendering information to the human neurosensory system and on transducing intentional, bio-modulated energy patterns that contain expressive information.

The core activity will lead to a demonstrable prototype system, but significant deployment will not be our main concern. We are proposing a core two year ($300K per year) effort to develop the basic ideas and system architectures that could lead to large-scale efforts in this arena.

Thus the basic proposal includes a simple small proof of concept demonstration with the components available in year 1 and the skeleton system shown in year 2. However, the options are designed to lead to a significant geographically distributed demonstration that will illustrate and test the basic system in one of the three chosen application areas.

For each of our subsystems, a key theme will be future scaling of current ideas to eventually support billions of simultaneous entities. Note that it is essential to our commodity architecture approach to Intermental systems that our Web-based software be universally runnable and that our HCI interfaces be both readily available (e.g. at Radio Shack) and very affordable, so that our technology can be widely deployed. We are aiming at a cost of about $1000 per person for a wearable multi-perception/expression system with a total of over 30 different ports. Scaling an Intermental system must take account not only of size but also of the inevitable failures that will occur in a system of such magnitude. This scaling will involve subsystem-dependent issues (for instance, for HCI we need to take advantage of multiple simultaneous human sensory subsystems), but we will use a common system-level approach based on novel hierarchical multi-resolution ideas to support integrated scaling for all three core technology areas. As part of this initial proposal, we will demonstrate the hierarchical scaling of the Intermental system through at least two levels. We will study the tradeoff between branching factor and number of hierarchical levels using our application areas and systems architecture study.
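The branching-factor/level tradeoff can be made concrete with a little arithmetic. The sketch below assumes a simple tree of aggregators in which each node combines the states of `branching` children; it counts the levels needed to reach a single group state and the number of aggregator nodes required. The tree model and the function names are assumptions for illustration, not the proposal's hierarchical multi-resolution design.

```python
def levels_needed(participants, branching):
    """Smallest number of aggregation levels L with branching**L >= participants."""
    if participants < 1 or branching < 2:
        raise ValueError("need participants >= 1 and branching >= 2")
    levels, capacity = 0, 1
    while capacity < participants:  # integer loop avoids floating-point log errors
        capacity *= branching
        levels += 1
    return levels


def aggregator_count(participants, branching):
    """Total aggregator nodes when each level groups `branching` children."""
    total, nodes = 0, participants
    while nodes > 1:
        nodes = -(-nodes // branching)  # ceiling division
        total += nodes
    return total


# A billion minds: fewer levels means fewer hops, but a larger fan-in per node.
for b in (10, 100, 1000):
    print(b, levels_needed(10**9, b))  # prints 9, 5 and 3 levels respectively
```

Each extra level adds latency and another point of failure, while a larger branching factor concentrates more traffic and more failure impact on each aggregator; this is the tradeoff the architecture study would quantify with real application requirements.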

We intend to use standard Web mechanisms to popularize this project and so obtain volunteers to become the minds of a future, highly scaled Intermental network. We expect children to find this project particularly exciting; they could use a set of simple collective (multi-player) games (developed separately as part of TangoInteractive activities) that would interest them and complement the serious special operations, battlefield medical, and crisis management demonstrations.

Our skeleton system and optional demonstrations will initially involve conventional computers but can be extended to other UltraScale systems as these become available. One of our systems architecture efforts will be to study the special issues and opportunities opened up by truly novel UltraScale systems built from biological and quantum components. This will involve interacting with the other P.I.s in this DARPA program.

In the following sections we describe these issues in more detail. Section B2 gives the technical background in human-computer interfaces, collaborative technologies, parallel and distributed computing, and application requirements. Section B3 integrates these into a description of our understanding of the current state of Intermental networks, and section B4 describes our project plan.

B2.1 Bot-Masters and NeatTools Background

In the following we give an overview of the Bot-Masters work now being initiated for the DARPA Distributed Robotics Program. This work is highly relevant to the Intermental network proposal, as it will develop new approaches to HCI that use the human body as an essential component of the interface to the human mind. We anticipate that the Bot-Masters work will yield important information about the phenomenological aspects of integrating physiological systems with traditional computational systems. The Bot-Masters effort will focus on applications that integrate with the DARPA wearable computer projects.

The research, prototyping, development and demonstration of technologies to support the gathering, interpretation and use of data is a major challenge. Such complex phenomena as battlefield dynamics easily overwhelm the best available information delivery systems. At present, the large number of relevant gathering and controlling systems and techniques creates an interface problem of vast proportions. Funneling the greatest amount of high-quality (prepared/processed) information to key personnel would be the ideal solution. It is therefore critical that technologies be researched and demonstrated for the representation of multiple, diverse data sources, for human interpretation of those data sources, and for powerful, deliberate and rapid interaction with that information. The Bot-Master activity will produce an integrated interactive perceptualization interface system that integrates multiple sensory channels for enhanced expressivity. This optimized single individual-computer linkage will be joined together as a network in this Intermental proposal. The Intermental activity will then feed back to the Bot-Master work, as it will give individuals new types of input, namely the additional perceptual dimensions corresponding to either the state of other individuals' minds or the Intermental net's synthesis of a group perceptual state.

Successful interfacing with external sensors and actuators depends on a high degree of context- and need-specific malleability within the interface itself. The ability to transmit any information presented to the sensory technology back to expert personnel depends primarily on how intelligent the interface is in its reconfigurability, processing and rendering options. The Bot-Masters project will develop a system that takes advantage of the entire body's neural capacities for sensing and processing information and bringing it to consciousness. We intend a total of over 30 separate perception or expression channels. The Bot-Masters system's multi-sensory interfaces will then make maximal use of the 'feature extraction' properties of the human senses; that is, each sensory system can be presented with a neurological margin of maximally meaningful input from the outside. The system will map to this margin for each of the senses involved, and system-resident artificial intelligence will allow reconfiguration based on contextual need. 3-D visual displays, spatialized audiomorphic and tactile body-surface significations, and other methods will present information from the sensors to the expert. EMG-like sensors across muscle surfaces, foot-activated pressure sensors, voice recognition, and specially signified body movements will all serve as methods for controlling actuators in real time as they perform work and send back information.
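To make the expression side concrete, here is a minimal sketch of how one EMG-like channel might be turned into on/off actuator commands, in the spirit of the simple scaling and discriminator filters the proposal mentions. It assumes a raw envelope signal with a known range, scales it linearly to 0..1, and uses two thresholds (hysteresis) so that noise near a single threshold does not make the command chatter. The class name and threshold values are hypothetical, not the Bot-Masters implementation.

```python
class ScaledDiscriminator:
    """Hypothetical scale-then-discriminate filter for one expression channel."""

    def __init__(self, raw_min, raw_max, on_level=0.6, off_level=0.4):
        if raw_max <= raw_min or not 0.0 <= off_level < on_level <= 1.0:
            raise ValueError("invalid scaling range or thresholds")
        self.raw_min, self.raw_max = raw_min, raw_max
        self.on_level, self.off_level = on_level, off_level
        self.active = False

    def scale(self, raw):
        """Linear scaling of the raw reading, clamped to [0, 1]."""
        x = (raw - self.raw_min) / (self.raw_max - self.raw_min)
        return min(1.0, max(0.0, x))

    def feed(self, raw):
        """Return "on" or "off" when the discriminator changes state, else None."""
        level = self.scale(raw)
        if not self.active and level >= self.on_level:
            self.active = True
            return "on"
        if self.active and level <= self.off_level:
            self.active = False
            return "off"
        return None


channel = ScaledDiscriminator(raw_min=0, raw_max=100)
events = [channel.feed(v) for v in [10, 65, 55, 62, 35, 50]]
```

The readings at 55 and 62 fall between the two thresholds and so leave the actuator state unchanged. A single threshold at 0.6 would instead have toggled the actuator off and on again.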

As well as the multiple sensory channels described above, the Bot-Masters project has two other key components. The first of these is the NeatTools software, a general, cross-platform, multi-threaded, real-time data flow system for the flexible visual programming of networked or linked modules. It allows us to rapidly link peripherals, filter modules and diagnostic displays in a way that lets us easily customize the different sensory components of the HCI. The NeatTools programming model has its roots in the formal Input/Output automaton model. In NeatTools, module abstraction is offered as a set of class methods for inter-module communication. Functional components (implemented as class objects) of a concurrent system are written as encapsulated modules that act upon local data structures or objects inside the class, some of which may be broadcast for external use. Relationships among modules are specified by logical connections among their broadcast data structures. Whenever a module has updated data and wishes to broadcast the change and make it visible to other connected modules, it calls an output service function, which broadcasts the target data structure according to the configuration of logical connections. Upon receiving the message event, each connected module executes its action engine according to the remote data structure. Thus output is essentially a byproduct of computation, and input is handled passively, treated as an instigator of computation. This approach simplifies module programming by cleanly separating computation from communication. Software modules written using module abstraction do not establish or effect communication, but are concerned only with the details of the local computation. Communication is declared separately, as logical relationships among the state components of different modules.
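The separation of computation from communication described above can be sketched in a few lines. This is a Python analogue written for illustration, not NeatTools code (NeatTools is C++ and multi-threaded, whereas delivery here is synchronous). Modules only compute and call `broadcast`, the output service function, while a separate `Network` object holds the declared logical connections and invokes each connected module's action engine (`on_input`). All class and method names are hypothetical.

```python
class Module:
    """Encapsulated computation; knows nothing about who consumes its outputs."""

    def __init__(self, name):
        self.name = name
        self._network = None

    def broadcast(self, port, value):
        # Output service function: delivery follows the declared connections.
        if self._network is not None:
            self._network.deliver(self, port, value)

    def on_input(self, port, value):
        """Action engine: subclasses compute when connected data arrives."""


class Network:
    """Holds logical connections, declared separately from module code."""

    def __init__(self):
        self._links = {}  # (source module, output port) -> [(dest, input port)]

    def add(self, *modules):
        for module in modules:
            module._network = self

    def connect(self, src, out_port, dst, in_port):
        self._links.setdefault((src, out_port), []).append((dst, in_port))

    def deliver(self, src, port, value):
        for dst, in_port in self._links.get((src, port), []):
            dst.on_input(in_port, value)


class Scale(Module):
    def __init__(self, name, gain):
        super().__init__(name)
        self.gain = gain

    def on_input(self, port, value):
        self.broadcast("out", value * self.gain)  # output is a byproduct


class Recorder(Module):
    def __init__(self, name):
        super().__init__(name)
        self.values = []

    def on_input(self, port, value):
        self.values.append(value)


net = Network()
gain, rec = Scale("gain", 2.0), Recorder("rec")
net.add(gain, rec)
net.connect(gain, "out", rec, "in")
gain.on_input("in", 3)  # input instigates computation; rec receives 6.0
```

Rewiring the data flow only touches `net.connect(...)` calls; neither `Scale` nor `Recorder` changes, which is the point of declaring communication separately.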

NeatTools is written in C++ for performance but can migrate to Java if appropriate when this language has sufficient performance. NeatTools has built-in linkage to the web and so it can be integrated with the overall web technology-based system software that we will build for the Intermental network.

Another important area of ongoing research concerns the filters that provide the "optimal" linkage between human and computer for each of the channels. Most of our current filters are relatively simple scaling and discriminator-based systems, but we are looking into more sophisticated approaches. In particular, we are experimenting with gesture-recognition modules based on either neural networks or the parallel cascade method, which provides an efficient way to represent and evaluate the input-output relation of a dynamic nonlinear system. The parallel cascade method is related to the Volterra and Wiener theories of nonlinear systems, but has the significant advantage of being able to rapidly approximate dynamic systems with high-order nonlinearities. In this iterative method, the black-box filter for NeatTools is initially represented by a single cascade consisting of a dynamic linear element (L) followed by a static (zero-memory, or instantaneous) nonlinear element (N), such as a polynomial. L is estimated by cross-correlating the experimental input and output (stimulus and response time series), and N can then be determined by a least-squares fit. A basic reference for this approach is Korenberg MJ (1991), Parallel cascade identification and kernel estimation for nonlinear systems, Ann Biomed Eng 19: 429-456. As described later, we believe that future systems of this type will make extensive use of such heuristic optimizations to best match input and output. However, this area will surely be the subject of much important research, and our goal is to design and prototype the overall system. We will typically use simple filters but allow the architecture to accommodate later improvements.
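The first step of the cascade fit can be sketched with NumPy. This sketch covers only the single initial L-N cascade described above: L is taken as the first-order input/output cross-correlation over `memory` lags, and N is a least-squares polynomial mapping L's output to the measured output. Later cascades in Korenberg's method are fitted to the residual using slices of higher-order cross-correlations, which this sketch does not attempt. The function name and the synthetic test system are assumptions for illustration.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def fit_ln_cascade(x, y, memory, degree):
    """Fit one dynamic-linear (L) / static-nonlinear (N) cascade to (x, y)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x)
    xc, yc = x - x.mean(), y - y.mean()
    # L: first-order cross-correlation phi_xy(j) = mean of x(k - j) * y(k)
    h = np.array([np.dot(xc[: n - j], yc[j:]) / (n - j) for j in range(memory)])
    # Pass the input through L (causal FIR filter with zero initial conditions)
    u = np.convolve(x, h)[:n]
    # N: least-squares polynomial y ~ c0 + c1*u + ... + c_degree*u**degree
    coeffs = P.polyfit(u, y, degree)

    def predict(x_new):
        x_new = np.asarray(x_new, dtype=float)
        return P.polyval(np.convolve(x_new, h)[: len(x_new)], coeffs)

    return h, coeffs, predict


# Synthetic "black box": a short FIR filter followed by a quadratic nonlinearity
rng = np.random.default_rng(0)
x = rng.standard_normal(5000)
v = np.convolve(x, [1.0, 0.5, 0.25])[: len(x)]
y = v + 0.3 * v**2
h, coeffs, predict = fit_ln_cascade(x, y, memory=5, degree=2)
residual = y - predict(x)
```

With a white Gaussian stimulus, the quadratic term does not bias the first-order cross-correlation, so `h` recovers the filter shape and the polynomial absorbs the nonlinearity. For a general system, the residual is what subsequent parallel cascades would model.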

B2.2 Collaborative Systems: TangoInteractive Background

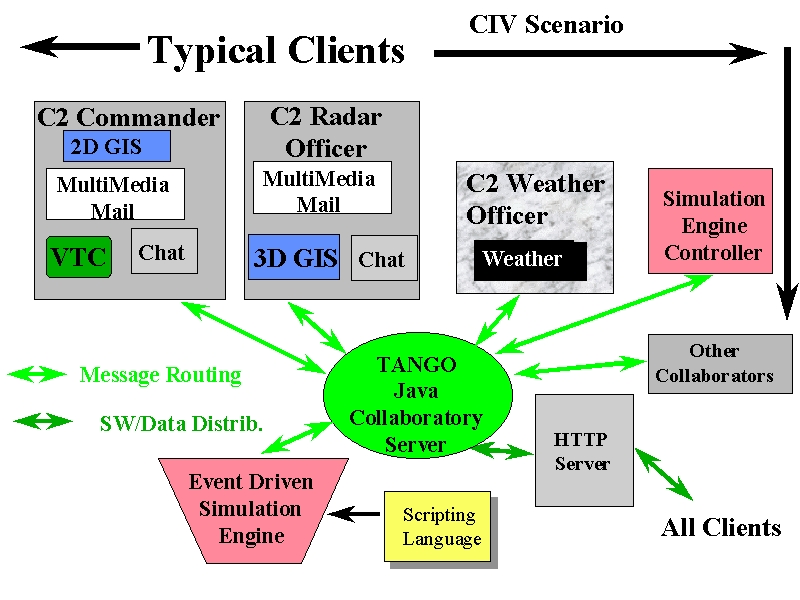

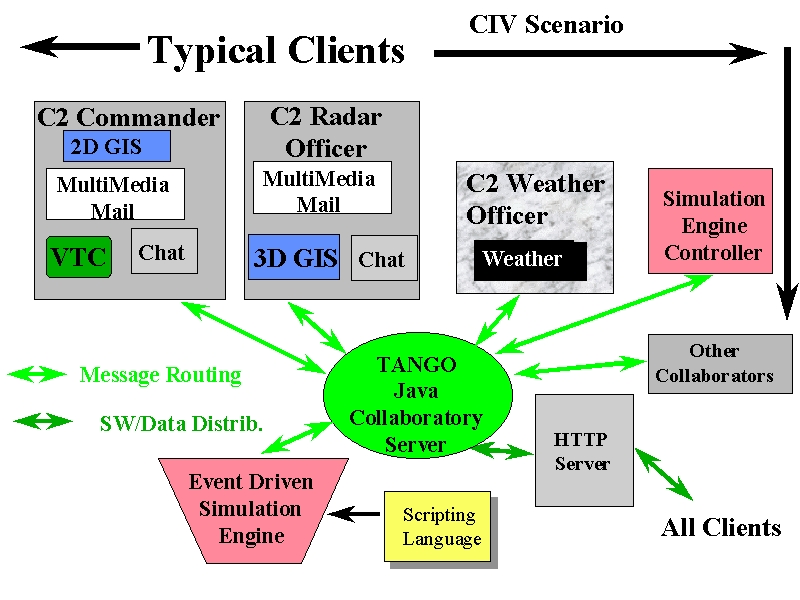

Web technology offers remarkable opportunities for sophisticated new environments linking people and computers. These are important in areas such as traditional video conferencing, telemedicine, command and control, crisis management, distance education, computational steering and visualization, and "human in the loop" distributed discrete event simulations. TangoInteractive from NPAC and Habanero from NCSA are probably the best-known general systems of this type. There are commercial systems, but these are either specialized, such as video conferencing systems (for instance CU-SeeMe), or implement the restricted shared-display model, like Microsoft's NetMeeting. There is another important class of asynchronous collaboration systems, such as those built on Lotus Notes, but these are not relevant to our coherent applications. TangoInteractive supports client applications written in any language and has over 40 application examples on its Web site (http://www.npac.syr.edu/Tango). TangoInteractive is relatively robust, as it has been used extensively in significant distance education applications, including a regular semester-long class offered by Syracuse to Jackson State University. TangoInteractive implements a general model of sharing distributed objects, with the objects replicated on each client and the message routing backbone ensuring that selected events specifying application state changes are properly distributed. This model for collaboration is both scalable and flexible, as one can choose which change events to share. We illustrate its application below in the command and control scenario for which TangoInteractive was originally built, in a project supported by Rome Laboratory.

The basic TangoInteractive technology is a distributed set of communicating Java servers and clients. The Java clients are applets launched from traditional browsers. The servers run session managers that log new users and ensure a common worldview, so that applications spawned on one client (whiteboards, lessons for distance education, etc.) are shared on all others. TangoInteractive avoids scalability problems by letting clients access all static data from an unrestricted number of information servers, where an information server is a generalized server supporting arbitrary content and Web protocols. Examples of information servers are HTTP servers, audio and video servers, database servers, and computational servers.
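The replicated-object model can be illustrated with a toy session manager. In this sketch, every client keeps its own replica of each shared application, spawning an application creates a replica on all current clients, and only change events marked as shared are relayed to the other replicas. This is a hypothetical Python illustration of the idea, not TangoInteractive's Java API. It runs in a single process, and it omits transferring existing state to clients that join late.

```python
class Client:
    """A participant holding its own replica of each shared application."""

    def __init__(self, name):
        self.name = name
        self.replicas = {}  # app_id -> dict holding that application's state

    def apply(self, app_id, key, value):
        self.replicas.setdefault(app_id, {})[key] = value


class SessionManager:
    """Logs users and relays selected state-change events to every replica."""

    def __init__(self):
        self.clients = []

    def join(self, client):
        self.clients.append(client)

    def spawn(self, app_id):
        # An application started on one client gets a replica on all clients.
        for client in self.clients:
            client.replicas.setdefault(app_id, {})

    def publish(self, sender, app_id, key, value, shared=True):
        sender.apply(app_id, key, value)  # the local change always happens
        if shared:  # only events selected for sharing are distributed
            for client in self.clients:
                if client is not sender:
                    client.apply(app_id, key, value)


session = SessionManager()
alice, bob = Client("alice"), Client("bob")
session.join(alice)
session.join(bob)
session.spawn("whiteboard")
session.publish(alice, "whiteboard", "stroke-1", (0, 0, 5, 5))
session.publish(alice, "whiteboard", "cursor", (1, 1), shared=False)
```

Only change events travel between clients, never whole application states. This is what lets the model scale, and the `shared` flag is the choice of which change events to share.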

This simple sharing of information already provides a powerful environment, but Tango generalizes it by allowing client messages to be processed by arbitrary filters before they are passed on to other clients. This allows different users to receive different views of the same data; in a complex command and control or more general Intermental environment, it is clearly necessary to present different decision makers with the level of detail needed for their responsibilities. Further, we can drive these filters by dynamic scripts, which allows one to include simulated users for training and modeling applications. Note that the "users" (more accurately, clients) generating and receiving messages can be either people or computers. For instance, computational steering in its simplest case corresponds to a single person interacting with a single computer. The overall TangoInteractive system is now a set of Java servers implementing a (distributed) event-driven simulator that accepts events from scripts, simulations, sensors, or clients corresponding to computers or people.
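Per-recipient filtering can be sketched as a router that passes each message through the recipient's own filter function before delivery. A filter may reshape a message (a commander sees only a summary) or drop it (a medic sees only casualty reports). The `FilteringRouter` class, the message fields and the example filters are hypothetical and are not Tango's filter interface.

```python
from typing import Callable, Optional

Message = dict
Filter = Callable[[Message], Optional[Message]]


class FilteringRouter:
    """Delivers each message through a per-recipient filter."""

    def __init__(self):
        self.inboxes = {}  # recipient name -> list of delivered messages
        self.filters = {}  # recipient name -> filter function

    def register(self, name, msg_filter: Filter = lambda m: m):
        self.inboxes[name] = []
        self.filters[name] = msg_filter

    def send(self, sender, message: Message):
        for name, msg_filter in self.filters.items():
            if name == sender:
                continue
            view = msg_filter(dict(message))  # filter a copy, not the original
            if view is not None:  # a filter may drop a message entirely
                self.inboxes[name].append(view)


router = FilteringRouter()
router.register("sensor")
router.register("commander", lambda m: {"unit": m["unit"], "status": m["status"]})
router.register("medic", lambda m: m if m.get("casualties", 0) > 0 else None)
router.send("sensor", {"unit": "A", "status": "contact", "casualties": 2, "grid": "18T"})
router.send("sensor", {"unit": "B", "status": "ok", "casualties": 0})
```

Nothing requires the sender to be a person: a script or a simulation can call `send` exactly as a human-operated client does, which is how simulated users could be injected for training.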

One of the cornerstones of the TangoInteractive design is the requirement that application protocols remain strictly opaque to the message routing and distribution framework. This approach is similar to that used to design and implement the family of IP protocols, where it is known that, to build a robust network, the network nodes should be stateless. In collaboratory environments a truly stateless node is not possible, but the amount of information needed to keep a message router in operation should be minimized. Although TangoInteractive servers support mechanisms of shared variables and locks that allow sophisticated sharing of application state, the application-specific variables are never interpreted by the message routers. This design allows us to separate development of the collaboratory backbone from development of applications. It also makes the system malleable and flexible in supporting arbitrary applications. We expect to capitalize on this flexibility in the design of the Intermental computer.
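The opacity principle is easy to show in miniature. The router below reads only a small fixed header (a session id and a payload length) and forwards the payload bytes unchanged to that session's subscribers. Its only state is the session table, and it never parses what the application sent. The header layout, `frame` helper and `OpaqueRouter` class are hypothetical illustrations, not Tango's wire format.

```python
import struct

# Hypothetical header: session id (uint32) + payload length (uint16), network order
HEADER = struct.Struct("!IH")


def frame(session_id, payload: bytes) -> bytes:
    """Prefix an opaque application payload with the routing header."""
    return HEADER.pack(session_id, len(payload)) + payload


class OpaqueRouter:
    """Forwards frames by session id; never decodes the application payload."""

    def __init__(self):
        self.sessions = {}  # session id -> list of subscriber queues (lists)

    def subscribe(self, session_id, queue):
        self.sessions.setdefault(session_id, []).append(queue)

    def route(self, data: bytes):
        session_id, length = HEADER.unpack_from(data)
        payload = data[HEADER.size : HEADER.size + length]
        for queue in self.sessions.get(session_id, []):
            queue.append(payload)  # bytes passed through untouched


router = OpaqueRouter()
q1, q2, q3 = [], [], []
router.subscribe(7, q1)
router.subscribe(7, q2)
router.subscribe(8, q3)
blob = b'{"app": "whiteboard", "op": "line"}'
router.route(frame(7, blob))
```

Because the router sees the payload only as bytes, a new application protocol (including a new Intermental data type) needs no change to the routing backbone.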

B2.3 Parallel, Distributed and Metacomputing Background

Parallel and distributed computing both address networks of computers. Parallel systems focus on low latency and high bandwidth, as needed to solve problems decomposed into closely coupled parts with tight synchronization constraints. Distributed systems use technologies similar to those of parallel computers but with different tradeoffs. For instance, the World Wide Web is a distributed system that uses message passing to link tens of millions of entities together, whereas the largest parallel MIMD systems, such as the ASCI machine at Sandia, have only 10,000 nodes. However, the Web supports essentially independent, asynchronous linkage of clients and servers, while the Sandia machine has a specialized interconnect capable of supporting the decompositions of most important problems. Web entities are loosely coupled through the exchange of email, worldwide search engines, separate clients accessing the same server, and similar asynchronous, indirect linkage. Problems on the Sandia machine would typically run in the classic MIMD loosely synchronous style, with inter-node communication effectively synchronizing each computational entity at a frequency that could be as high as 100 kHz. (Parallel system message-passing latencies are in the 1-30 microsecond range.) So we see distributed systems focusing on scaling and on issues of geographical distribution, while parallel computers focus on synchronization and high performance. Parallel systems tend to be homogeneous and distributed systems heterogeneous. Metacomputers are hierarchically organized, heterogeneous distributed systems whose components can themselves be parallel or distributed subsystems. Usually metacomputing corresponds to the use of such a set of computers to solve a single metaproblem. Problems suitable for metacomputing tend to consist of a set of coarse-grain modules, which are coherently linked but with modest latency and bandwidth constraints that can be satisfied by the geographically distributed components of the metacomputer.
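The link between the quoted latencies and synchronization frequency is simple arithmetic: if each loosely synchronous step must wait for at least one message latency, the step rate cannot exceed the reciprocal of that latency. The sketch below assumes exactly that and ignores computation time, so it gives an upper bound only; a 10 microsecond latency corresponds to 100 kHz, consistent with the figure in the text.

```python
def max_sync_frequency_hz(latency_s: float) -> float:
    """Upper bound on the loosely synchronous step rate when each step must
    wait for at least one message latency (computation time is ignored)."""
    if latency_s <= 0:
        raise ValueError("latency must be positive")
    return 1.0 / latency_s


if __name__ == "__main__":
    # Message-passing latencies quoted in the text: 1-30 microseconds.
    for latency_us in (1, 10, 30):
        f_khz = max_sync_frequency_hz(latency_us * 1e-6) / 1e3
        print(f"{latency_us:>2} us latency -> at most {f_khz:,.1f} kHz")
```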

There are some important similarities and differences between Intermental and traditional computer networks. Both consist of nodes that communicate by some form of message passing, and in both cases these messages supply information and implicitly or explicitly synchronize the nodes. However, the individual minds of an Intermental net have a natural cycle time of order a millisecond, so the synchronization time needed for coherence is much longer for an Intermental net than for a parallel system whose nodes today have a clock cycle of a few nanoseconds. Further, the human body does have diverse sensory channels, but these can be satisfied by an information flow in the range of 1 to 100 megabits per second, which includes the transfer of high-quality (compressed) video streams. These communication needs can easily be satisfied by a good geographically distributed digital network. Thus our Intermental system will act like a parallel system but can be realized in a geographically distributed fashion. This is important because, for most important applications (as in the three cases we chose in this proposal), the individuals in a net are naturally at different locations. This "good news" about network requirements has a flip side. In digital computer systems, parallel computers have comparable internal and external bandwidths, with the internal speeds about a factor of ten higher. (Here we compare access to local and distributed memory.) However, the human mind is supported by a brain, a set of linked neurons whose internal connectivity is much richer and higher performance than the perceptual interface of the body. This implies that whereas parallel computers "just solve" the same problems as individual sequential computers, an Intermental network will not be put together to mimic an individual brain scaled in size by the number of members in the net.

Rather, the parallel Intermental system will gain its power through coherent group decision making of a different style from that used internally in the brain. We see this distinction in today's computer science: "neural networks" are modeled after the algorithms used internally in the brain, while "expert systems" roughly embody the methodology used between brains. Our proposed Intermental net combines and improves these two fields of computer science, with our higher-performance linkage of minds implying new possibilities in group decision making. In summary, we need to combine the techniques of both parallel and distributed systems, because our proposed Intermental net is a parallel linkage of minds joined to a distributed metasystem of parallel and sequential computers.
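The bandwidth argument made earlier in this section (1 to 100 megabits per second per participant) can be made concrete with a back-of-the-envelope model. The sketch below assumes a hypothetical star topology in which each participant uploads one stream at a fixed rate to a central server that forwards streams to the others; the function name and the example rate of 10 Mbit/s per participant are illustrative assumptions, not measurements.

```python
from typing import Dict, Optional


def star_bandwidth_mbps(n_participants: int, per_stream_mbps: float,
                        streams_received: Optional[int] = None) -> Dict[str, float]:
    """Bandwidth of a hypothetical star topology in which every participant
    uploads one stream to a central server that forwards streams back out."""
    if n_participants < 1:
        raise ValueError("need at least one participant")
    if streams_received is None:
        streams_received = n_participants - 1  # unfiltered: see everyone else
    r = per_stream_mbps
    return {
        "uplink_per_participant": r,
        "downlink_per_participant": streams_received * r,
        "server_ingress": n_participants * r,
        "server_egress": n_participants * streams_received * r,
    }


if __name__ == "__main__":
    full = star_bandwidth_mbps(10, 10.0)                       # everyone sees everyone
    synth = star_bandwidth_mbps(10, 10.0, streams_received=1)  # one synthesized view each
    print(full)
    print(synth)
```

With 10 participants at 10 Mbit/s, unfiltered sharing needs 90 Mbit/s of downlink per participant and 900 Mbit/s of server egress, while delivering a single synthesized view to each participant cuts these to 10 and 100 Mbit/s. This is one reason the filtering and synthesis stages matter for scaling, even though per-person rates are modest.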

On a technical level, we emphasize that at all levels our system is a set of linked modules. This is seen inside the brain, with linked neurons; in our wearable computer, with the NeatTools software that links filters, body signals and computer peripherals; and in our parallel and distributed systems, which communicate via messages. We are not stressing traditional distributed computing technology in our base proposal, but it is included in our options, which include a significant geographically distributed deployment. This will probably use Argonne's Globus technology at a low level and NPAC's WebFlow interface at a high level. Globus is the best available toolkit for distributed systems. WebFlow can be thought of as a coarse-grain version of NeatTools, allowing either a visual or a scripted interface to join modules together. Here the modules are full computer programs, perhaps running on a parallel system, rather than the simple basic filters of NeatTools. However, the similar programming paradigms suggest that we will be able to design a hierarchical system with the same networked-module computing model at each level.
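The "same networked module computing model at each level" can be sketched as a composite pattern, in which a chain of modules is itself a module that can be placed inside a larger chain. This is our own simplification: NeatTools and WebFlow connect modules in general graphs, whereas the hypothetical `FilterModule` and `Pipeline` classes below support only linear chains.

```python
from typing import Any, Callable, List


class Module:
    """Anything that transforms an input value into an output value."""

    def process(self, x: Any) -> Any:
        raise NotImplementedError


class FilterModule(Module):
    """A fine-grain module wrapping a simple function (NeatTools-like level)."""

    def __init__(self, fn: Callable[[Any], Any]) -> None:
        self.fn = fn

    def process(self, x: Any) -> Any:
        return self.fn(x)


class Pipeline(Module):
    """A composite module: a chain of modules that is itself a Module,
    so it can be nested inside a larger (coarser-grain) pipeline."""

    def __init__(self, stages: List[Module]) -> None:
        self.stages = stages

    def process(self, x: Any) -> Any:
        for stage in self.stages:
            x = stage.process(x)
        return x


if __name__ == "__main__":
    inner = Pipeline([FilterModule(lambda xs: [v * 2 for v in xs]), FilterModule(sum)])
    outer = Pipeline([FilterModule(lambda xs: [v for v in xs if v >= 0]),
                      inner,
                      FilterModule(lambda s: s / 10)])
    print(outer.process([1, -3, 4]))
```

Here the outer pipeline drops negative samples, passes the result through an inner pipeline that doubles and sums it, and finally divides by 10, mapping `[1, -3, 4]` to `1.0`; the inner pipeline is used exactly as if it were a single filter.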

B2.4 Application Background: Interventional Telemedicine

We gave some details of the special operations application in section B1 and so here we elaborate on the medical application and in the following section on crisis management. As part of Warner's work as a leader of the national effort investigating technology for telemedicine, it has become clear that the appropriate concepts are distributed medical intelligence or interventional informatics. The latter is the intentional utilization of information and information technology to alter the outcome of a dynamic process, and will play an ever greater part in the future of telemedical services. Distributed medical intelligence services are emerging as viable entities supporting the practice of telemedicine. The transition from center-based medical services to internet-based distributed medical knowledge services is made feasible by the increased accessibility to the global communication infrastructure.

Technology supports human care and creativity. Humans relate to each other for many diverse purposes through technological elements. The development of medical applications for the Intermental network might even be thought of as a kind of prototyping of the next-generation societal resource. That is, as the structure and function of information systems and communication technologies are perfected for creating communication pathways between persons and groups, they will increasingly resemble in character the physiological bases for communication within living systems. Further, the more is learned about the neurological bases of thought, language and communication, the more neurobiological in character the information technologies created to allow communication between human beings will become. The interface boundary between the human and the technology will become increasingly seamless, to the point where in some cases it is virtually non-existent. In short, the Intermental network represents real progress in social and systemic thinking about how to significantly change the face of current problems with multimedia communications technology. The communications infrastructure becomes a kind of nervous system for a newly emerging social body, a "Homo cyberiens".

B2.5 Application Background: Crisis Management

Command and Control is the military description of a general real-time decision (or judgment) support environment involving a complex set of people, datasets, and computational resources. A critical characteristic is the need to make the "best possible," as opposed to "optimal," choice. In a civilian context, crisis management was examined in a recent NRC study ("Computing and communications in the extreme: Research for crisis management and other applications," in Workshop Series on High Performance Computing and Communications, National Academy Press, Washington, D.C., 1996; Computer Science and Telecommunications Board, Commission on Physical Sciences, Mathematics, and Applications). Fox and Lois Clark McCoy of the National Institute for Urban Search and Rescue (NIUSR http://www.niusr.org) met at this working group and are collaborating in NIUSR's XII initiative. To quote from a draft ACTD proposal:

"The Xtreme Information Infrastructure (XII) has been undertaken by NIUSR to enable the development of a large-scale coordinated National Inter-Agency Response System. The XII Intelligent Data Fusion System resolves the problems of heterogeneous databases by utilizing commercial off the shelf (COTS) technology to provide a service layer that isolates the rigidity of legacy data applications from dynamic changes in real-world environments. Modules in the XII Intelligent Data Fusion System gather data from myriad collection of dispersed sources then intelligently transforms the heterogeneous databases, stovepipe applications, sensor-based subsystems and simulations, unstructured data, semantic content, into virtual knowledge bases."

XII is addressing the civilian side of many events of interest to DARPA, including both biological and nuclear crises. The Intermental network is designed to aid both the tactical (what to do in the next second to mitigate damage) and strategic aspects of crisis management. Using TangoInteractive, with its Web-based infrastructure, allows us to "plug" an Intermental net into an existing Tango-based system. Tango is currently being adapted for use in the first XII demonstration, which is planned for May at Hanscom Air Force Base. Tango's Web structure allows it to interact with the myriad (stovepipe) information sources using standard Web interfaces (such as HTML, VRML, CGI) as the interchange mechanism.

In the original command and control application shown in the figure of sec. B2.2, Tango linked: military and civilian radar sensors and personnel tracking aircraft; NORAD as military command in the execution stage; federal and state leaders at the highest level (President and Governor); weather simulation to assess intercept possibilities and, in the response stage, the dispersal of bacterial agents; FEMA as civilian command in the response stage; and finally, medical authorities for expertise and treatment. TangoInteractive, as a general collaboratory, supplies digital video conferencing, text-based chatboards, and shared whiteboards, which the participants use to interact in a typical unstructured collaborative fashion. We are linking Tango with general back-end information resources such as Lotus Notes and the EIS system commonly used today by crisis managers. Further, this application needs shared two- and three-dimensional geographical information systems (GIS). The filter capability of Tango is used to ensure that each participant receives only appropriate information. For instance, the President and Governor would be spared many of the detailed shared displays used by those lower down the command chain. More generally, one wishes to present a given object in different ways to different participants. Thus the radar and weather officers could use complex three-dimensional geographical information systems to study the event in detail, while the expected weather and tracking data would be presented to others less involved in those areas as simpler two-dimensional summaries. In the Intermental case, the filtering becomes subtler, as we need to use it to customize the perceptual states perceived and expressed by each participant.
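The role-dependent presentation described above amounts to a mapping from participant role to a view function applied to the same shared object. The sketch below assumes a hypothetical `Track` object and role names taken from the scenario; it is not the filter interface Tango actually uses. Unknown roles default to the least detailed view.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Track:
    """Hypothetical shared object: a tracked aircraft."""
    track_id: str
    positions: List[Tuple[float, float, float]]  # (x km, y km, altitude km)


def detailed_3d_view(track: Track) -> dict:
    # Full trajectory, suitable for a three-dimensional GIS display.
    return {"id": track.track_id, "points": list(track.positions)}


def summary_2d_view(track: Track) -> dict:
    # Latest ground position only, suitable for a two-dimensional summary.
    x, y, _ = track.positions[-1]
    return {"id": track.track_id, "latest_xy": (x, y), "n_points": len(track.positions)}


ROLE_VIEWS: Dict[str, Callable[[Track], dict]] = {
    "radar_officer": detailed_3d_view,
    "weather_officer": detailed_3d_view,
    "governor": summary_2d_view,
    "president": summary_2d_view,
}


def render_for(role: str, track: Track) -> dict:
    """Present the same shared object differently according to role."""
    return ROLE_VIEWS.get(role, summary_2d_view)(track)
```

Defaulting unknown roles to the summary view is a conservative design choice for the sketch: a participant added without an explicit role sees less detail rather than more.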

B3 Intermental Networks

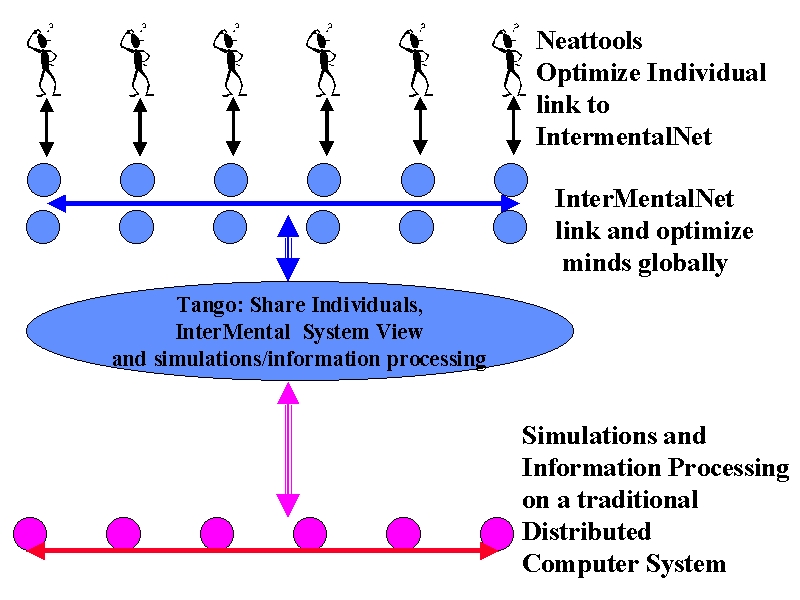

The accompanying figure cartoons the system we imagine. We first detail the needed functionality at a high level and then discuss what exists today; comparing the two allows us to define what needs to be done as part of this proposal. First, a point of terminology: for any entity (whether a mind, a computer or any combination thereof), we use perception to mean the "awareness" of input to that entity; correspondingly, expression refers to output from the entity.

Eventually we imagine a worldwide link of potentially millions or billions of minds and artificial computers as the Ultimate UltraScale system. The artificial computers are currently traditional PCs, workstations and higher-end digital machines, including massively parallel systems. Eventually these will include computers built using technologies stemming from the existing research in the UltraScale program. This conventional computer network uses distributed and parallel computing techniques to integrate information and simulation to solve complex problems. These computer science issues have been, and rightly continue to be, the focus of major research and development activities. We intend to use the results of parallel and distributed computer systems research in this proposal in two ways. First, we will learn from it to design new approaches to the linkage of minds; second, we will use it to integrate the distributed computer system with an Intermental network of minds. We will not, however, duplicate existing distributed or parallel computer research programs here, but will focus solely on issues raised by the Intermental net.

The individual minds are linked to client computers using multi-sensory rendering techniques for enriched perception and multi-modality expressional interfaces. The NPAC/MindTel team has built a prototype of such a multi-modal interface and will extend it as part of DARPA's new Bot-Master project. This uses the novel visual-programming environment NeatTools to rapidly build and experiment with the network of filters and peripherals, which form the interface. We have started experimentation with some of the techniques useful for optimizing the interface to maximize the effectiveness of the human mind in exploiting the body's ability to create enriched perceptions and generate complex expressions for an "optimal result".

The Bot-Masters project focuses on a single individual perceiving information, which could come from the computer network shown at the bottom of the figure. Our Intermental system needs two additional components. First, it links multiple minds together and, using a generalization of the TangoInteractive system, shares the different objects in the net. These include the results of the computer processing but also the perceptions and expressions of each mind. This ability of each individual to see the input data and output expressions of others already provides an interesting coherent system of the type proposed. The decisions of individuals can and will be modified by seeing the "thoughts" of others in real time. Thus this system already illustrates the key coherent, simultaneous linkage of minds that characterizes an Intermental network. Second, we can generalize the Intermental concept by adding the middle set of computers, whose task is to optimally synthesize the individual expressions of each object in the net, whether it be a mind or a conventional computer, into one or more "summaries". In a parallel computing analogy, this synthesis is "just" the output of the parallel system formed by the Intermental net. Just as optimizing an individual computer-human interface is a long, difficult research program, we assume that optimizing the synthesis of the expressions of multiple minds and computers will be a long ongoing activity. So in this proposal we cannot expect to solve this problem; rather, we will produce a proof-of-concept system and some pointers to fruitful future activity. We expect that either simple statistical combination or, more ambitiously, the usual general heuristic algorithms such as genetic algorithms, neural networks and simulated annealing will be the basis of our and others' initial attack on this critical hard problem. We term these approaches generically physical optimization, as they are based on optimization methods used by physical systems.
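As a baseline for the "simple statistical combination" mentioned above, one can treat each participant's expression as a numeric estimate with a confidence weight and synthesize a weighted mean, reporting the weighted standard deviation as a crude measure of disagreement. Representing expressions as (value, weight) pairs is our assumption for illustration; the heuristic methods named in the text would replace this function.

```python
import math
from typing import List, Tuple


def synthesize(expressions: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Combine (value, weight) expressions into a weighted mean and a weighted
    standard deviation; the latter is a crude measure of group disagreement."""
    if any(w < 0 for _, w in expressions):
        raise ValueError("weights must be non-negative")
    total_w = sum(w for _, w in expressions)
    if total_w == 0:
        raise ValueError("need at least one positive weight")
    mean = sum(v * w for v, w in expressions) / total_w
    var = sum(w * (v - mean) ** 2 for v, w in expressions) / total_w
    return mean, math.sqrt(var)
```

For two equally weighted expressions of 1.0 and 3.0 the synthesis is a mean of 2.0 with spread 1.0; raising one participant's weight pulls the mean toward that participant's value.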

Looking at our system in total, we see optimized human-computer interfaces linked together to produce one or more synthesized views, which can themselves be synthesized with the expressions of one or more distributed computer systems. The collected expressions of individual and synthesized entities form a set of distributed objects that TangoInteractive shares with all participants. This sharing forms a nonlinear feedback loop: it produces new perceptions, which in turn give rise to time-dependent expressions from this parallel, coherent Intermental net.
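The feedback loop can be explored with a toy model, which is entirely our own assumption rather than a model of real minds: each participant's expression is a number in (-1, 1), the synthesized view is their mean, and each participant's next expression is a nonlinear (tanh) function of a blend of its own expression and the shared view. The parameter names `coupling` and `gain` are hypothetical.

```python
import math
from typing import List


def feedback_step(expressions: List[float], coupling: float, gain: float) -> List[float]:
    """One round: synthesize the shared view, then every participant perceives
    it and produces a new expression."""
    shared_view = sum(expressions) / len(expressions)  # synthesized group view
    return [math.tanh(gain * ((1 - coupling) * x + coupling * shared_view))
            for x in expressions]


def run(expressions: List[float], coupling: float = 0.5, gain: float = 1.5,
        steps: int = 20) -> List[List[float]]:
    """Iterate the loop and return the history of expressions, initial state first."""
    history = [list(expressions)]
    for _ in range(steps):
        expressions = feedback_step(expressions, coupling, gain)
        history.append(expressions)
    return history
```

Because tanh is monotone and its slope is at most `gain`, the spread between participants shrinks by at least a factor of `gain * (1 - coupling)` per step, so with the defaults (0.75) the expressions converge toward a common value; when that factor exceeds 1 this guarantee no longer holds.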

So we bring to the table

B4 Project Plan

Above we have listed the ideas and the existing hardware and software artifacts that we believe can be used to significantly develop the concept of an Intermental net and to deliver a system architecture and prototype implementation. We have divided this effort into the seven tasks given in section D. Each task will be led by one or two of the four key scientists identified in section H.

Task 1 is led by Fox and Warner and includes the overall system architecture research and an investigation of the special issues associated with linking Intermental nets with the other UltraScale computing approaches. Task 2 is led by Warner and Lipson and encompasses the human-computer interface issues: researching the hardware and software options and system integration, as well as the human cognitive ability to perceptualize multiple sensory sources, which include representations of the perceptual state of other minds. Task 3 is led by Podgorny and covers the study of issues in collaborative technologies as they are relevant to Intermental nets. This work will include general studies and specific development of the TangoInteractive system. Task 3 also includes using this integration software to build the prototype hardware and software system that will demonstrate our Intermental system architecture. Task 4 is led by Fox and covers issues in parallel and distributed computing. Currently we do not expect this to be a large effort; it will focus on the accurate use of insights from parallel computing in the Intermental net architecture. Task 5, also led by Fox, concerns elaboration of the application requirements. This will grow in importance in the options, which are designed to be exercised in years 2 and 3 and allow a major demonstration of the use of an Intermental net in a rich application scenario. For these options, we intend to add to our current team. We have identified Furmanski from NPAC and Foster from Argonne, as they are collaborating on appropriate high-performance Web-based metacomputing. We will also add expertise from the chosen application field and will work with DARPA in choosing an application with good defense relevance and appropriateness for the use of Intermental nets. The final tasks, 6 and 7, led by Fox and Warner, cover system integration and reporting. Here we tie together the other tasks and will present results in a timely fashion, with significant use of the Web to disseminate information.

C Deliverables

D Statement of Work (SOW)

We will develop a realistic and comprehensive plan to research, identify, test and evaluate critical issues in Intermental networks and decompose them into key thrusts of our project. We will set up an appropriate management and reporting structure. We will establish two ongoing overall research areas, which will interact with the main thrusts in HCI, Collaboration, parallel/distributed computing, application requirements, and systems integration. These two research areas are described in tasks 1.1 and 1.2.

We will analyze conventional and new UltraScale technologies under development and evaluate their computational power, bandwidth and latency as these affect integration with an Intermental net. We will consider the natural computational paradigms for these technologies and analyze their suitability for direct support of the Intermental net in the areas of filtering or integration. This will include collective computing paradigms, such as generalized cellular automata and neural networks, which could be particularly relevant for close integration with an Intermental system. The analysis will cover both the impact of other technologies on the Intermental net and vice versa.

We will research and identify concepts that are related to Intermental nets. This will include virtual environments, complex systems studies of emergent behavior in (worldwide) computer networks, parallel neural networks and other areas to be identified. We will build the lessons learned into our system architecture.

We will develop a realistic and comprehensive plan to research, identify, test and evaluate critical issues of the human-computer interface as it relates to the Intermental network. This plan will be guided by the overall intent to understand and exploit the various aspects of the physiological and cognitive phenomena of human-computer interaction within the Intermental network. The plan shall include defining the research areas and methodology required to gain necessary knowledge in the following areas:

During the planning phase the HCI team will interact closely with the individuals working on the "Bot-Masters" wearable computer project in order to identify areas of synergy where redundant efforts may be reduced and to establish a mechanism for sharing information between the two programs.

We will research the neurophysiological capabilities and functional limits of the human user interfacing with multisensory rendering systems. We will utilize computer-to-human interface technology being developed for the "Bot-Masters" project. Research will be focused on actual usability in order to better understand how best to integrate a comprehensive set of visual, aural and tactile rendering devices into the interactive perceptualization system. We will devise methods to give the user an integrative experiential interaction with complex data types such as those they may encounter in operational participation within the Intermental network. We will develop research methods to evaluate human interaction with interface technology that renders computer information onto multiple human sensory systems to give a sustained perceptual effect (i.e., a sensation with a context). We will research the human's ability to consistently combine these different rendering modalities, thus providing insight for developing spatial coding of the rendered information. We will research and evaluate how the implementation of vision, hearing, and touch technologies can allow for simultaneous sensation of multiple independent and dynamic data sets that can be integrated physiologically into a single perceptual state.

Researching technologies to support the interpretation of data is a major challenge. We will research users' cognitive abilities as they interact with various methods of rendering complex phenomena. We will test and evaluate the user's ability to consistently integrate information from a number of relevant input systems. We will research the operability limits of various perceptualization techniques. We will evaluate the operational limits of representation and interpretation of multiple, diverse data sources, and the consistency of human interpretation of these data sources.

We will research and evaluate methods and limits of integrating multiple data input devices into a single system. Our research will develop evaluation methods for inputs such as bioelectric signal detectors, dynamic bend sensors, pressure sensors, audio and video digitizers and other devices. We will also assess the limits of usability of traditional input devices such as mice, joysticks and keyboards to determine when interface complexity precludes their use as primary inputs. We will research and evaluate the users' ability to integrate several input systems so as to have a multiplicity of simultaneous interaction options. We will research the capacity for filtering and combining data streams from the various human-to-computer input devices. We will research the capacity for developing user-defined gestures for controlling various parameters of the interface system. This work will include delivery of a prototype wearable computer based on the Bot-Masters effort but modified for use in the Intermental net.
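Filtering input streams and detecting user-defined gestures can start from very simple signal processing. The sketch below assumes a bend-sensor signal already normalized to the range [0, 1]; it smooths the signal with an exponential moving average and reports gesture onsets using two thresholds (hysteresis), so that noise near a single threshold does not trigger repeated detections. The function names and threshold values are illustrative, not part of the Bot-Masters software.

```python
from typing import Iterable, List


def ema(samples: Iterable[float], alpha: float) -> List[float]:
    """Exponential moving average; alpha in (0, 1], larger means less smoothing."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    out: List[float] = []
    y = None
    for x in samples:
        y = x if y is None else alpha * x + (1 - alpha) * y
        out.append(y)
    return out


def detect_gestures(signal: List[float], on: float, off: float) -> List[int]:
    """Return sample indices where a gesture starts.

    A gesture starts when the signal rises to `on` and ends only when it
    falls back to `off` (off < on), which suppresses chatter near a threshold.
    """
    starts: List[int] = []
    active = False
    for i, v in enumerate(signal):
        if not active and v >= on:
            active = True
            starts.append(i)
        elif active and v <= off:
            active = False
    return starts
```

For a raw signal `[0, 0, 1, 1, 1, 0, 0, 1, 1, 0]`, smoothing with `alpha=0.5` and thresholds of 0.7 (on) and 0.3 (off) reports onsets at samples 3 and 8, slightly later than the raw transitions at samples 2 and 7 because of the smoothing lag.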

Task 3 Collaborative Technologies Thrust

Task 3.1 Core Collaborative Technologies

We shall research and evaluate the systems, architecture, and design issues of both general collaborative technologies and the specific TangoInteractive system, as they are relevant for the Intermental net. We will include issues of scaling, fault tolerance and performance in this study as well as the ability to link diverse heterogeneous systems such as the Intermental minds, hybrid UltraScale computers and general filters. We will implement those capabilities in TangoInteractive that are identified by this task as needed for the prototype system of task 3.3.

Task 3.2 Intermental Net Specific Filters and Technologies

We will combine the expertise of the collaboration and HCI thrust members to decide on the specific details of the linkage of the TangoInteractive collaboration system with the modified Bot-Master suits being developed in task 2. This will lead to the design of interfaces that allow flexible integration of the base collaboration system, the suits, and filters to produce either individual or group perceptual states. These interfaces will be designed to meet the application requirements of task 5.

Task 3.3 Prototype Integrated System

We will use TangoInteractive and the Bot-Master technology to produce a skeleton implementation of an Intermental net. This will include "place-holders" for all critical system functionality but not include detailed application specific modules. This skeleton will allow effective demonstrations of the concepts of an Intermental net and be designed so that it can be extended to the optional demonstration for a specific application.

Task 4 Parallel and Distributed Computing Thrust

This task will examine parallel computing architectures, their performance analysis and their programming paradigms to suggest effective approaches to Intermental nets viewed as message-parallel systems of minds. Results of this task will feed directly into tasks 3.3 and 6.1.

The second subtask is the research and evaluation of distributed computing technologies, as they are needed to integrate an Intermental net into a global information processing system. This activity will feed into task 6.1 and the proposed options, which involve a significant application specific demonstration.

Task 5 Application Requirements and Demonstrations

We will continue interaction with experts in the three chosen areas - special operations, medical intelligence and crisis management - to identify opportunities and requirements for Intermental nets. We will interact with DARPA and other organizations to suggest other application areas, which we can use to drive the architecture and motivate an Intermental net. In particular we will study education and training where Intermental technology could lead to new ways of linking teachers and pupils.

This task is the main deliverable, as it includes the overall Intermental system architecture studies, which will encompass and synthesize results from all the other tasks. We will present the architecture so that future research and development activities can be planned. The system architecture includes a prototype software system (task 3.3).

We shall research and evaluate the systems, architecture, and design engineering required to research, prototype, and demonstrate interactive environments that combine new ways to render complex information with advanced computer-to-human input devices. These will render content-specific information onto multiple human sensory systems, giving a sustained perceptual effect, while monitoring and measuring human responses in the form of physiometric gestures, speech, eye movements, and various other inputs.

We shall research and evaluate the systems, architecture, and design issues in the use of collaborative technologies to support Intermental nets and their use in critical applications. This activity will include study of other approaches than the specific TangoInteractive technology used in Task 3. We will examine functionality, performance, fault tolerance and architectures that will best support both today's systems and future scaling to millions or billions of linked minds.

Task 7 Reporting

We will integrate our findings across the different thrusts and present conclusions as to how HCI, collaboration, and distributed computing impact the Intermental net. We will incorporate application requirements and integration with other UltraScale technologies into our reports. The report will include a comparative analysis of Intermental nets with other forms of computers and virtual environments for different application areas. Our system architecture will be described in a forward-looking fashion so that future demonstrations and research in this area can be evaluated; in particular, the description will allow evaluation of our proposed optional activities.

Reports will be presented at DARPA meetings, conferences and in scholarly journals.

E Schedule of milestones

90 days

1) Set up a management plan and identify major team members. Deploy or purchase necessary computer resources.

2) Development of a realistic research plan outlining the research areas and methodology required to gain necessary knowledge relevant to the development of the Intermental network

3) Establish contacts suggested by DARPA to enhance productivity of effort.

120 days

1) Establishment of the resources (equipment, location and personnel) needed to perform the research on the major human-computer interaction and collaborative systems efforts as they relate to the Intermental network.

2) Set up an initial Web site with the major identified local and worldwide Web resources

One year

Generation of an initial report outlining the findings of the research in all aspects and making recommendations for further efforts in this area, including data needed for evaluation of the year 2 option.

18 months

1) Integration of knowledge gained from HCI research with the collaboration and distributed computing efforts.

2) Initial demonstration of the skeleton system integrating TangoInteractive with the Bot-Masters wearable computer.

F Technology Transfer

We have a clear technology transfer path, as NPAC, a research unit of Syracuse University, has close ties with industry; we believe much of the best technology innovation in the rapidly changing Web and distributed object arenas is, and must be, performed outside academia. NPAC is funded by the state of New York to help transfer technology to industry in the state. In this project, all the products will be available in the public domain, although we are subcontracting to two small businesses, MindTel and WebWisdom.com, to ensure that we can use the best commercial infrastructure. One of the important advantages of the TangoInteractive system is that it is not just a research system: significant effort has been and will be put into making it a relatively robust, deployable system. This proposal has a far-out vision of millions and billions of linked minds, but the concepts could have near-term value, either commercially or to DoD, in the applications we reviewed in section B. We expect commercial and DoD interest to be in modest systems of a few linked minds, and we will explore and support technology transfer in this area. The University will, as always, retain commercialization rights and license them, respecting Government rights.

G Comparison with other ongoing research

Our concept of the eventual worldwide implementation of Intermental nets is related to the work on Internet Ecologies by the Dynamics of Computation group at Xerox PARC (http://www.parc.xerox.com/spl/members/hogg/). This work by Hogg and Huberman (see "Strong Regularities in World Wide Web Surfing" by Bernardo A. Huberman, Peter L. T. Pirolli, James E. Pitkow, and Rajan M. Lukose) uses a complex systems approach that we believe is a good framework. However, we believe their work largely addresses the current Web, without the essential coherence of linked minds postulated in the Intermental net. Similarly, the Global Brain of Peter Russell (http://pespmc1.vub.ac.be/GBRAINREF.html) follows this approach; it should be included in our analysis but is an essentially different idea. The Global Brain work of Mayer-Kress at the Beckman Institute, University of Illinois (http://www.ccsr.uiuc.edu/People/gmk/Publications/pub-intl.html), is a significant contribution on which we will build. We note that chapter 3 of Fox's book "Parallel Computing Works!" presents a general complex systems approach to computation that will be a useful integrating methodology.

As discussed in section B, our proposal is synergistic with, but different from, the activities in shared virtual environments. These will be a critical addition in our optional demonstration phase. Note that Intermental nets modulate perceptual state by linking the minds of other users of the net, whereas virtual environments modulate perceptual state by an accurate rendition of the physical system as it is modified by the other users. Work in this area includes the CAVE (http://www.evl.uic.edu/EVL/index.html), VRML, and the distributed simulation (DIS, HLA) activities (see for instance http://www.stl.nps.navy.mil/dis-java-vrml/ ). We work on the interface between VRML and HLA for the DoD HPCC Modernization PET program and so are familiar with some of these activities. See "Exploring JSDA, CORBA and HLA based MuTech's for Scalable Televirtual (TVR) Environments" by G. Fox, W. Furmanski et al., to appear in the proceedings of the Workshop on OO and VRML at the VRML98 Conference, Monterey, Feb. 16-19, 1998.

TangoInteractive is a synchronous collaboratory system. Its development has been sponsored by DARPA in the project "Collaboratory Interaction and Visualization" managed by Rome Laboratory. TangoInteractive is very tightly integrated with Web infrastructure, with its main system modules executing within the browser. The system effectively turns the Web browser into a communication device.

At present, there are no commercial offerings of software functionally comparable with TangoInteractive. Current commercial collaboratory systems, such as Microsoft NetMeeting, Netscape Conference, or systems offered by Internet startups such as VocalTec or Contigo, offer only minimal and rather simplistic support for the collaborative process. None of these systems is extensible or scalable, and it is unlikely that any of them can serve as a foundation for incremental development of the complex group interaction patterns involved in the current proposal. The field of synchronous collaboration includes a large number of academic research projects, so our selection of reference projects is somewhat arbitrary; we compare TangoInteractive only against reasonably similar systems.

The system most similar to TangoInteractive in scope, goals, and technological means is the ISAAC project at the University of Illinois and NCSA (http://www.ncsa.uiuc.edu/SDG/Projects/ISAAC). The collaborative kernel of ISAAC is Habanero, a Java collaboratory framework developed by an excellent NCSA team with which we collaborate through the NSF PACI program. Habanero and TangoInteractive are functionally very similar, and their architectures, built around a central collaboratory server, have much in common. Both systems are relatively mature, each with more than three dozen collaborative modules and an established user base inside and outside the DoD community. Although they follow similar concepts in session management and floor control, Habanero and TangoInteractive differ in some important respects. Habanero is not strictly a Web system: it is implemented as a set of Java applications. In contrast, the TangoInteractive client is part of the Web browser. Tango's architecture is much more difficult to implement, as the system developers are critically dependent on the ever-changing properties of Netscape and Microsoft products. The benefit of such a system, beyond software downloadability, is a more extensive use of Web infrastructure. TangoInteractive application modules, mostly implemented as applets, use Web servers as data sources. The importance of separating the event and data distribution channels has already been explained in Section B2.2. Further, TangoInteractive uniquely supports lightweight collaborative applications written in JavaScript, which gives our system a remarkable rapid prototyping capability. TangoInteractive also supports interfaces to applications implemented in arbitrary languages, including C/C++, a capability that dramatically expands the system's application base.
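To make the central-server model concrete, here is a minimal sketch in Python (not TangoInteractive's or Habanero's actual code) of a server that enforces floor control and forwards small control events, while bulk data stays on a Web server and travels only as a URL reference. The class and method names, the `Event` format and the example.org URL are all hypothetical, and the sketch assumes a single-process simulation with in-memory delivery callbacks rather than real network connections.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Event:
    """Small control message sent on the event channel (hypothetical format)."""
    sender: str
    kind: str
    url: str  # reference to bulk data held on a Web server, not the data itself


class CollaboratoryServer:
    """Hypothetical central server: enforces floor control and imposes one global event order."""

    def __init__(self) -> None:
        self.clients: Dict[str, Callable[[Event], None]] = {}
        self.floor_holder: Optional[str] = None
        self.log: List[Event] = []  # ordered history, e.g. for session recording and playback

    def join(self, client_id: str, deliver: Callable[[Event], None]) -> None:
        self.clients[client_id] = deliver

    def request_floor(self, client_id: str) -> bool:
        # Grant the floor only if it is free or already held by this client.
        if self.floor_holder in (None, client_id):
            self.floor_holder = client_id
            return True
        return False

    def release_floor(self, client_id: str) -> None:
        if self.floor_holder == client_id:
            self.floor_holder = None

    def publish(self, event: Event) -> bool:
        # Only the floor holder may publish; every other participant receives the event.
        if event.sender != self.floor_holder:
            return False
        self.log.append(event)
        for client_id, deliver in self.clients.items():
            if client_id != event.sender:
                deliver(event)
        return True


# Data channel: a stand-in for an ordinary Web server holding bulk content (hypothetical).
web_server = {"http://example.org/slide1.gif": b"GIF89a..."}

received: Dict[str, List[Event]] = {"alice": [], "bob": []}
server = CollaboratoryServer()
for name, inbox in received.items():
    server.join(name, inbox.append)

server.request_floor("alice")
server.publish(Event("alice", "show_slide", "http://example.org/slide1.gif"))
# Bob resolves the reference over the data channel rather than through the server.
slide = web_server[received["bob"][0].url]
```

Keeping the server on the control path gives every participant the same event order and a single point for floor control and recording, while large content never passes through it.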

Both Habanero and TangoInteractive support, or will soon support, critical functionality expected in an advanced collaboratory system, including session recording and playback, rich multimedia support, collaborative access to Web databases, and a rich set of presentation and annotation tools for many data formats. In our opinion, Habanero and TangoInteractive represent comparably developed examples of advanced Internet collaboratory systems.

The MASH toolkit, which is part of the UC Berkeley "Scalable Architecture" project (http://mash.cs.berkeley.edu), is a premier Internet collaboratory framework. MASH builds on the foundation created by the MBONE collaboratory tools. This framework is very different from TangoInteractive: multicast is the central ingredient around which the entire MASH architecture is built, whereas Tango is built around a central server. MBONE/MASH is certainly a valid approach to collaboration problems, and we believe that both architectures will be used in future systems. TangoInteractive uses multicast and certain protocols that resulted from the MBONE effort, such as RTP and RTCP, to distribute multimedia data streams while retaining its central server for the control function.
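RTP, one of the MBONE-derived protocols TangoInteractive uses for media streams, prefixes every media packet with a 12-byte fixed header defined in RFC 3550. The Python sketch below packs and parses that header with the standard `struct` module; it illustrates the wire format only, is not taken from TangoInteractive, and its function names are hypothetical. In the hybrid design described above, control traffic would still go through the central server, and only media packets such as these would be sent to a multicast group.

```python
import struct

RTP_VERSION = 2


def pack_rtp_header(payload_type: int, seq: int, timestamp: int, ssrc: int,
                    marker: bool = False) -> bytes:
    """Pack the 12-byte RTP fixed header (RFC 3550, section 5.1) with no padding,
    no header extension and no CSRC entries."""
    if not 0 <= payload_type < 128:
        raise ValueError("payload type must fit in 7 bits")
    byte0 = RTP_VERSION << 6                    # V=2, P=0, X=0, CC=0
    byte1 = (int(marker) << 7) | payload_type   # M bit followed by 7-bit PT
    return struct.pack("!BBHII", byte0, byte1, seq & 0xFFFF,
                       timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)


def unpack_rtp_header(data: bytes) -> dict:
    """Decode the fixed header fields from the first 12 bytes of a packet."""
    byte0, byte1, seq, timestamp, ssrc = struct.unpack("!BBHII", data[:12])
    return {
        "version": byte0 >> 6,
        "padding": bool(byte0 & 0x20),
        "extension": bool(byte0 & 0x10),
        "csrc_count": byte0 & 0x0F,
        "marker": bool(byte1 & 0x80),
        "payload_type": byte1 & 0x7F,
        "sequence": seq,
        "timestamp": timestamp,
        "ssrc": ssrc,
    }


# Payload type 0 is PCMU audio; 160 samples correspond to 20 ms at 8 kHz.
header = pack_rtp_header(payload_type=0, seq=1, timestamp=160, ssrc=0x1234ABCD)
```

The 16-bit sequence number wraps around, which is why `seq` is masked; receivers use it, together with RTCP reports, to detect loss and reordering on the multicast stream.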

Another very interesting Internet collaboratory project is Rutgers' DISCIPLE (http://www.caip.ruther.edu/multimedia/groupware). DISCIPLE is more a framework for developing collaboratory applications than a complete system, as there seem to be only a few publicly available applications. However, DISCIPLE's strength lies in its use of the newest Java event delegation model to link collaboratory applications to the collaboratory server, a linkage known as the "collaboration bus". TangoInteractive was developed before this technology was available. We note, however, that the next generation of our system is scheduled for public release in April '98 and will be the basis of Tango's use in this proposal. This release uses advanced Java technologies such as the Reflection API to support intra-nodal application communication (necessary for a truly integrated system) and the event delegation model to implement the API for TangoInteractive applications. Although DISCIPLE and TangoInteractive have different global architectures, both systems will be able to support collaboration transparency: applications implemented as JavaBeans will be able to connect to the system automatically, without modification of their stand-alone versions. This implementation of collaboration transparency differs from earlier implementations. In its most trivial form, collaboration transparency is supported by shared-display systems such as Microsoft NetMeeting or Intel's ProShare. A different approach has been proposed in Old Dominion's Java Collaborator Toolkit (JCE, http://capella.ncsl.nist.gov/JCE/demo.html). JCE postulates replacing the standard AWT (Abstract Window Toolkit) with a collaborative version. The modified AWT broadcasts each GUI event to all other instances of the application; hence, an application only needs to be recompiled with the new toolkit to become collaborative.
This solution is somewhat inflexible, as developers cannot decide which functionality should be shared. The event delegation model used with JavaBeans applications allows for the implementation of both collaboration-unaware and collaboration-aware applications. In TangoInteractive, these two modes supplement the traditional message-passing API used for applications not built with the JavaBeans methodology.
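The difference between broadcasting every GUI event (the JCE approach) and event delegation can be illustrated with a small Python analogue of the JavaBeans pattern. In this sketch, which is hypothetical and not the DISCIPLE or TangoInteractive API, the component (`Bean`) is unchanged from its stand-alone form and merely notifies registered listeners of property changes; a `CollaborationAdapter` registers as one more listener and forwards only the properties the developer lists in `shared`. In Java, the corresponding standard machinery is `java.beans.PropertyChangeSupport` together with `PropertyChangeListener`.

```python
from typing import Callable, List, Optional, Set

Listener = Callable[[str, object, object], None]


class Bean:
    """A stand-alone, collaboration-unaware component that reports property changes to listeners."""

    def __init__(self) -> None:
        self._props: dict = {}
        self._listeners: List[Listener] = []

    def add_listener(self, listener: Listener) -> None:
        self._listeners.append(listener)

    def get(self, name: str) -> object:
        return self._props.get(name)

    def set(self, name: str, value: object) -> None:
        old = self._props.get(name)
        self._props[name] = value
        if old != value:
            for listener in list(self._listeners):
                listener(name, old, value)


class CollaborationBus:
    """Hypothetical bus that relays shared changes between application instances."""

    def __init__(self) -> None:
        self.adapters: List["CollaborationAdapter"] = []

    def broadcast(self, origin: "CollaborationAdapter", name: str, value: object) -> None:
        for adapter in self.adapters:
            if adapter is not origin:
                adapter.apply_remote(name, value)


class CollaborationAdapter:
    """Attaches to an unmodified Bean as an ordinary listener; shared=None shares every property."""

    def __init__(self, bean: Bean, bus: CollaborationBus, shared: Optional[Set[str]] = None) -> None:
        self.bean, self.bus, self.shared = bean, bus, shared
        self._applying_remote = False
        bus.adapters.append(self)
        bean.add_listener(self._on_local_change)

    def _on_local_change(self, name: str, old: object, new: object) -> None:
        # Skip echoes of remote updates and properties the developer chose not to share.
        if self._applying_remote or (self.shared is not None and name not in self.shared):
            return
        self.bus.broadcast(self, name, new)

    def apply_remote(self, name: str, value: object) -> None:
        self._applying_remote = True
        try:
            self.bean.set(name, value)
        finally:
            self._applying_remote = False


bus = CollaborationBus()
viewer_a, viewer_b = Bean(), Bean()
CollaborationAdapter(viewer_a, bus, shared={"zoom"})
CollaborationAdapter(viewer_b, bus, shared={"zoom"})

viewer_a.set("zoom", 2.0)       # shared: replicated to viewer_b
viewer_a.set("cursor", (5, 7))  # private: stays local
```

Here zoom changes propagate between the two viewers while cursor movement stays private, which is the per-property choice that a replaced AWT cannot offer. The `_applying_remote` flag prevents a remote update from being echoed back onto the bus.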

In general, we feel that TangoInteractive supports most of the collaboratory functionality available in the leading research collaboratory systems and that it uses the most recent Web technology to implement this functionality. The system's growing user community, currently including Army Research Lab, Waterways Experiment Station, Wright-Patterson AFB, Ohio Supercomputer Center, and National Center for Supercomputing Applications, as well as a number of defense contractors and commercial companies, makes TangoInteractive a competitive system at the national level.

H List of key personnel

Fox obtained undergraduate and Ph.D. degrees in mathematics and physics, respectively, at Cambridge University. He was on the faculty at Caltech for 20 years, where he also held administrative roles as Executive Officer for Physics and Associate Provost for Computing. Fox is currently Director of NPAC and Professor of Computer Science and Physics at Syracuse University. He is an internationally recognized expert in the practical use of computing technology in a variety of areas. He has published 300 papers and 3 books. While at Caltech he pioneered the development of the systems and application software for the hypercube parallel computer. Fox was also a co-founder (with Hopfield, Mead and Van Essen) of Caltech's Computation and Neural Systems Program, and much of his interest and expertise in complex systems was developed as part of this program. He has led or participated in several DARPA and other DoD research and development contracts. He has always emphasized the role of applications in driving and validating technology; his book "Parallel Computing Works!", which describes the use of HPCC technologies in 50 significant application examples, illustrates this. A new book, "Building Distributed Systems on the Pragmatic Object Web", describes many of the base systems technologies that will be used in this proposal. Fox directs InfoMall, which is focused on accelerating the introduction of modern information technology into New York State industry and developing the corresponding software and systems industry. With Marek Podgorny, Fox has led the very successful development of the TangoInteractive collaborative system for distance education and command and control. Selected papers are:

1. Fox, G. C., Johnson, M. A., Lyzenga, G. A., Otto, S. W., Salmon, J. K., Walker, D. W., Solving Problems on Concurrent Processors, Vol. 1, Prentice-Hall, 1988; Vol. 2, 1990.

2. Fox, G. C., Messina, P., Williams, R., Parallel Computing Works!, Morgan Kaufmann, San Mateo, CA, 1994.

3. Fox, G. C., Furmanski, W., "Computing on the Web, New Approaches to Parallel Processing, Petaop and Exaop Performance in the Year 2007", IEEE Internet Computing, pp. 39-46, March/April 1997.

4. Fox, G. C., Furmanski, W., "Parallel and Distributed Computing using Commodity Web and Object Technologies", Proceedings of PARCO Conference, Bonn, September 1997, to be published.

5. Fox, G. C., "Approaches to Physical Optimization", in Proceedings of the 5th SIAM Conference on Parallel Processing for Scientific Computing, pp. 153-162, Houston, TX, March 25-27, 1991, J. Dongarra, K. Kennedy, P. Messina, D. Sorensen, R. Voigt, editors, SIAM, 1992.

Warner, a medical neuroscientist with an MD from Loma Linda University, is the Director of the Institute for Interventional Informatics and has gained international recognition for pioneering new methods of physiologically based human-computer interaction. Warner's research has focused on advanced instrumentation and new methods of analysis that can be applied to evaluating various aspects of human function as it relates to human-computer interaction; the aim of this effort has been to identify methods and techniques that optimize information flow between humans and computers. Warner's work indicates that an optimal mapping of interactive interface technologies to the human nervous system's capacity to transduce, assimilate and respond intelligently to information in an integrative, multisensory interaction will fundamentally change the way humans interact with information systems. Application areas for this work include quantitative assessment of human performance, augmentative communication systems, environmental controls for the disabled, medical communications and integrated interactive educational systems. Warner is particularly active in the transfer of aerospace and other defense-derived technologies to the fields of health care and education. Specific areas of interest are: advanced instrumentation for the acquisition and analysis of medically relevant biological signals; intelligent informatics systems that both augment the general flow of medical information and provide decision support for the health care professional; public-access health information databases designed to empower the average citizen to become more involved in their own health care; and advanced training technologies that adaptively optimize interactive educational systems to the capacity of the user. Selected publications are:

2. Warner D, Tichenor J.M, Balch D.C. (1996) Telemedicine and Distributed Medical Intelligence, Telemedicine Journal 2: 295-301.

3. Warner, D., Anderson, T., and Johanson, J. (1994). Bio-Cybernetics: A Biologically Responsive Interactive Interface, in Medicine Meets Virtual Reality II: Interactive Technology & Healthcare: Visionary Applications for Simulation Visualization Robotics. (pp. 237-241). San Diego, CA, USA: Aligned Management Associates.