Internet Computing and the Emerging Grid

IAN FOSTER

When the network is as fast as the computer's internal links, the machine disintegrates across the net into a set of special purpose appliances.

-- Gilder Technology Report, June 2000.

Internet computing and Grid technologies promise to change the way we tackle complex problems. They will enable large-scale aggregation and sharing of computational, data and other resources across institutional boundaries. And harnessing these new technologies effectively will transform scientific disciplines ranging from high-energy physics to the life sciences.

The data challenge

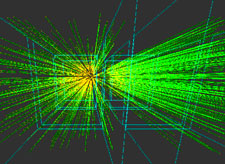

Each of the LHC's heavy-ion collision experiments may yield up to 2000 subsidiary particles, observed via 180,000 data channels. (Image: CERN)

Fifty years of innovation have increased the raw speed of individual computers by a factor of around one million, yet they are still far too slow for many challenging scientific problems. For example, detectors at the Large Hadron Collider at CERN, the European Laboratory for Particle Physics, will by 2005 be producing several petabytes of data per year -- a million times the storage capacity of the average desktop computer. Performing even the most rudimentary analysis of these data will probably require the sustained application of some 20 teraflops (trillion floating-point operations) per second of computing power. Compare this with the 3 teraflops per second produced by the fastest contemporary supercomputer, and it is clear that more sophisticated analyses will need orders of magnitude more power.
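The scale of the gap can be checked with simple arithmetic on the figures quoted above. The following is a minimal sketch in Python; the names are illustrative, and the decimal definition of a petabyte (10^15 bytes) is an assumption, not something the figures above specify.

```python
# Figures quoted in the text; all names here are illustrative only.
REQUIRED_TERAFLOPS = 20.0  # sustained rate for rudimentary LHC analysis
FASTEST_TERAFLOPS = 3.0    # fastest contemporary supercomputer
PETABYTE = 10**15          # bytes, decimal convention (an assumption)


def shortfall_factor(required, available):
    """How many times more computing power is needed than is available."""
    return required / available


def implied_desktop_bytes(yearly_bytes, ratio=10**6):
    """Desktop capacity implied by 'a million times the average desktop'."""
    return yearly_bytes // ratio


factor = shortfall_factor(REQUIRED_TERAFLOPS, FASTEST_TERAFLOPS)
desktop = implied_desktop_bytes(PETABYTE)

print(f"Rudimentary analysis needs about {factor:.1f}x the fastest machine")
print(f"One petabyte per year implies a desktop of {desktop:,} bytes")
```

On these numbers, even the rudimentary analysis needs roughly seven times the fastest single machine, and each petabyte of yearly output corresponds to about one gigabyte of desktop storage.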

Cluster and conquer

One solution to the problem of inadequate computer power is to 'cluster' multiple individual computers. This technique, first explored in the early 1980s, is now standard practice in supercomputer centres, research labs and industry. The fastest supercomputers in the world are collections of microprocessors, such as the 8,000-processor ASCI White system at Lawrence Livermore National Laboratory in California. Many research laboratories operate low-cost PC clusters or 'farms' for computing or data analysis. This year's winner of the Gordon Bell Award for price-performance in supercomputing achieved US$0.92 per megaflop per second on an Intel Pentium III cluster. And good progress has been made on the algorithms needed to exploit thousands of processors effectively.

Although clustering can provide significant improvements in total computing power, a cluster remains a dedicated facility, built at a single location. Financial, political and technical constraints place limits on how large such systems can become. For example, ASCI White cost $110 million and needed an expensive new building. Few individual institutions or research groups can afford this level of investment.

Internet Computing

Rapid improvements in communications technologies are leading many to consider more decentralized approaches to the problem of computing power. There are over 400 million PCs around the world, many as powerful as an early 1990s supercomputer. And most are idle much of the time. Every large institution has hundreds or thousands of such systems. Internet computing seeks to exploit otherwise idle workstations and PCs to create powerful distributed computing systems with global reach and supercomputer capabilities.

The opportunity represented by idle computers has been recognized for some time. In 1985, Miron Livny showed that most workstations are often idle [1], and proposed a system to harness those idle cycles for useful work. Exploiting the multitasking possible on the popular Unix operating system and the connectivity provided by the Internet, Livny's Condor system is now used extensively within academia to harness idle processors in workgroups or departments. It is used regularly for routine data analysis as well as for solving open problems in mathematics. At the University of Wisconsin, for example, Condor regularly delivers 400 CPU days per day of essentially "free" computing to academics at the university and elsewhere: more than many supercomputer centres.

But although Condor is effective on a small scale, true mass production of Internet computing cycles had to wait a little longer for the arrival of more powerful PCs, the spread of the Internet, and problems (and marketing campaigns) compelling enough to enlist the help of the masses. In 1997, Scott Kurowski established the Entropia network to apply idle computers worldwide to problems of scientific interest. In just two years, this network grew to encompass 30,000 computers with an aggregate speed of over one teraflop per second. Among its several scientific achievements is the identification of the largest known prime number.

Figure 1 Performance of the Entropia network.

The next big breakthrough in Internet computing was David Anderson's SETI@home project. This enlisted personal computers to work on the problem of analysing data from the Arecibo radio telescope for signatures that might indicate extraterrestrial intelligence. Demonstrating the curious mix of popular appeal and good technology required for effective Internet computing, SETI@home is now running on half a million PCs and delivering 1,000 CPU years per day -- making it the fastest (admittedly special-purpose) computer in the world.

The SETI@home Internet computing project has become the world's largest distributed computer.
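To put the SETI@home and Condor figures quoted above on a common footing, the sketch below converts both to CPU days per day. It is a rough illustration only: the inputs are the rounded numbers from the text, the 365-day year is an assumption, and the names are invented for the example.

```python
# Rounded figures quoted in the text; names are illustrative only.
SETI_PCS = 500_000               # "half a million PCs"
SETI_CPU_YEARS_PER_DAY = 1_000   # SETI@home delivery rate
CONDOR_CPU_DAYS_PER_DAY = 400    # Condor at the University of Wisconsin
DAYS_PER_YEAR = 365              # assumption: ignore leap years


def cpu_days_per_day(cpu_years_per_day):
    """Convert a delivery rate in CPU years per day to CPU days per day."""
    return cpu_years_per_day * DAYS_PER_YEAR


seti_cpu_days = cpu_days_per_day(SETI_CPU_YEARS_PER_DAY)
# Average CPU days each participating PC contributes per calendar day.
per_pc_share = seti_cpu_days / SETI_PCS
# How many Wisconsin-sized Condor pools would deliver the same rate.
condor_equivalents = seti_cpu_days / CONDOR_CPU_DAYS_PER_DAY

print(f"SETI@home: {seti_cpu_days:,} CPU days per day")
print(f"Average share per PC: {per_pc_share:.2f} CPU days per day")
print(f"Equivalent Condor pools: {condor_equivalents:.1f}")
```

Taken at face value, the quoted figures imply that the average participating PC contributes most of each day to the project, and that SETI@home delivers several hundred times the Condor rate quoted earlier.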

Entropia, Anderson's United Devices, and other entrants have now gone commercial, hoping to turn a profit by selling access to the world's idle computers -- or the software required to exploit idle computers within an enterprise. Significant venture funding has been raised, and new "philanthropic" computing activities have started that are designed to appeal to PC owners. Examples include Parabon's Compute-against-Cancer, which analyses patient responses to chemotherapy, and Entropia's FightAidsAtHome project, which uses the AutoDock package to evaluate prospective targets for drug discovery. Although the business models that underlie this new industry are still being debugged, the potential benefits are enormous: for instance, Intel estimates that its own internal "Internet computing" project has saved it $500 million over the past ten years.

What does this all mean for science and the scientist? A simplistic view is that scientists with problems amenable to Internet computing now have access to a tremendous new computing resource. All they have to do is cast their problem in a form suitable for execution on home computers -- and then persuade the public (or an Internet computing company) that their problem is important enough to justify the expenditure of "free" cycles.

The Grid

But the real significance is broader. Internet computing is just a special case of something much more powerful -- the ability of communities to share resources as they tackle common goals. Science today is increasingly collaborative and multidisciplinary, and it is not unusual for teams to span institutions, states, countries and continents. E-mail and the web provide basic mechanisms that allow such groups to work together. But what if they could link their data, computers, sensors and other resources into a single virtual laboratory? So-called Grid technologies [2] seek to make this possible, by providing the protocols, services and software development kits needed to enable flexible, controlled resource sharing on a large scale.

Grid computing concepts were first explored in the 1995 I-WAY experiment, in which high-speed networks were used to connect, for a short time, high-end resources at 17 sites across North America. Out of this activity grew a number of Grid research projects that developed the core technologies for "production" Grids in various communities and scientific disciplines. For example, the US National Science Foundation's National Technology Grid and NASA's Information Power Grid [3] are both creating Grid infrastructures to serve university and NASA researchers, respectively. Across Europe and the United States, the closely related European Data Grid, Particle Physics Data Grid and Grid Physics Network (GriPhyN) projects plan to analyse data from frontier physics experiments. And outside the specialized world of physics, the Network for Earthquake Engineering Simulation Grid (NEESgrid) aims to connect US civil engineers with the experimental facilities, data archives and computer simulation systems used to engineer better buildings.

The creation of large-scale infrastructure requires the definition and acceptance of standard protocols and services, just as the Internet protocol suite (TCP/IP) is at the heart of the Internet. No formal standards process as yet exists for Grids (the Grid Forum is working to create one). Nonetheless, we see a remarkable degree of consensus on core technologies. Essentially all major Grid projects are being built on protocols and services provided by the Globus Toolkit [4], which was developed by my group at Argonne National Laboratory in collaboration with Carl Kesselman's team at the University of Southern California's Information Sciences Institute, and other institutions. This open-architecture and open-source infrastructure provides many of the basic services needed to construct Grid applications [5], such as security, resource discovery, resource management and data access.

The Future

Although Internet and Grid computing are both new technologies, they have already proven themselves useful and their future looks promising. As technologies, networks and business models mature, I expect that it will become commonplace for small and large communities of scientists to create "Science Grids" linking their various resources to support human communication, data access and computation. I also expect to see a variety of contracting arrangements between scientists and Internet computing companies providing low-cost, high-capacity cycles. The result will be integrated Grids in which problems of different types can be routed to the most appropriate resource: dedicated supercomputers for specialized problems that require tightly coupled processors, and idle workstations for more latency-tolerant data analysis problems.
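The routing idea in the paragraph above can be illustrated with a toy dispatcher. This is a hypothetical sketch, not part of the Globus Toolkit or any existing Grid software: the `Job` and `Resource` types, the `route` function and the single `tightly_coupled` flag are all invented for the example, and a real scheduler would weigh many more factors.

```python
from dataclasses import dataclass
from enum import Enum


class Resource(Enum):
    """The two resource classes named in the text."""
    SUPERCOMPUTER = "dedicated supercomputer"
    IDLE_WORKSTATIONS = "idle workstations"


@dataclass(frozen=True)
class Job:
    """A hypothetical unit of work submitted to an integrated Grid."""
    name: str
    tightly_coupled: bool  # True if processors must communicate frequently


def route(job: Job) -> Resource:
    """Send tightly coupled jobs to a supercomputer, everything else to idle PCs."""
    if job.tightly_coupled:
        return Resource.SUPERCOMPUTER
    return Resource.IDLE_WORKSTATIONS


jobs = [
    Job("coupled simulation", tightly_coupled=True),
    Job("independent data analysis", tightly_coupled=False),
]
for job in jobs:
    print(f"{job.name} -> {route(job).value}")
```

The point of the sketch is only that the decision depends on how much the processors need to talk to one another, not on the scientific discipline the problem comes from.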

Web links

Popular internet computing projects:

- SETI@home setiathome.ssl.berkeley.edu

- Folding@home http://www.stanford.edu/group/pandegroup/Cosm/

- Compute-against-Cancer www.parabon.com/cac.jsp

- Fight AIDS@home http://www.fightaidsathome.org/

- Great Internet Mersenne Prime Search mersenne.org

- Casino 21: Climate simulation http://www.climate-dynamics.rl.ac.uk/

Internet computing companies:

- Entropia http://www.entropia.com/

- United Devices http://www.uniteddevices.com/home.htm

- Parabon http://www.parabon.com/

- Popular Power http://www.popularpower.com/

Grid projects:

- Grid Physics Network project http://www.griphyn.org/

- European Data Grid grid.web.cern.ch/grid

- Particle Physics Data Grid http://www.ppdg.net/

- Network for Earthquake Engineering Simulation Grid http://www.neesgrid.org/

- The Globus Project http://www.globus.org/

- The Global Grid Forum http://www.gridforum.org/

References

1. Mutka, M. & Livny, M. The Available Capacity of a Privately Owned Workstation Environment. Performance Evaluation 12, 269-284 (1991).

2. Foster, I. & Kesselman, C. (Eds). The Grid: Blueprint for a New Computing Infrastructure. Morgan Kaufmann (1999).

3. Johnston, W., Gannon, D. & Nitzberg, W. Grids as Production Computing Environments: The Engineering Aspects of NASA's Information Power Grid. Proc. 8th Symposium on High Performance Distributed Computing, IEEE Computer Society Press (1999).

4. Foster, I. & Kesselman, C. Globus: A Toolkit-Based Grid Architecture. In ref. 2, pages 259-278. Morgan Kaufmann (1999).

5. Butler, R., Engert, D., Foster, I., Kesselman, C., Tuecke, S., Volmer, J. & Welch, V. Design and Deployment of a National-Scale Authentication Infrastructure. IEEE Computer (in press).

6. Foster, I., Kesselman, C. & Tuecke, S. The Anatomy of the Grid: Enabling Scalable Virtual Organizations. www.globus.org/research/papers/anatomy.pdf

Dr. Ian Foster is a Senior Scientist and Associate Division Director in the Mathematics and Computer Science Division at Argonne National Laboratory, and Professor of Computer Science at the University of Chicago. He co-leads the Globus Project, a multi-institutional R&D project focused on the development and application of Grid technologies. His research is supported by the U.S. Department of Energy, National Science Foundation, Defense Advanced Research Projects Agency, and National Aeronautics and Space Administration. More information is available at www.mcs.anl.gov/~foster. Ian Foster is also a member of the Scientific Advisory Board of Entropia, Inc.
