TeraGrid QA Testing and Debugging

Abstract

The mission of the TeraGrid Quality Assurance (QA) working group is to identify and implement improvements to TeraGrid software components, services, and production deployments that reduce the number of failures requiring operator intervention at TeraGrid resource provider sites. The TeraGrid QA group used FutureGrid for two experiments: GRAM 5 scalability testing and GridFTP 5 testing.

Intellectual Merit

Demonstrates FutureGrid as a testbed for testing sophisticated distributed-system middleware.

Broader Impact

Tested new TeraGrid middleware and illustrated how to use FutureGrid as a testbed for middleware testing.

Use of FutureGrid

Used as a middleware testbed.

Scale Of Use

UF Nimbus cluster

Publications

Results

This success story illustrates collaboration with TeraGrid and the ability to acquire short-term access to FutureGrid resources in order to perform QA testing of software.

The mission of the TeraGrid Quality Assurance (QA) working group is to identify and implement improvements to TeraGrid software components, services, and production deployments that reduce the number of failures requiring operator intervention at TeraGrid resource provider sites. The TeraGrid QA group used FutureGrid in the following experiments:

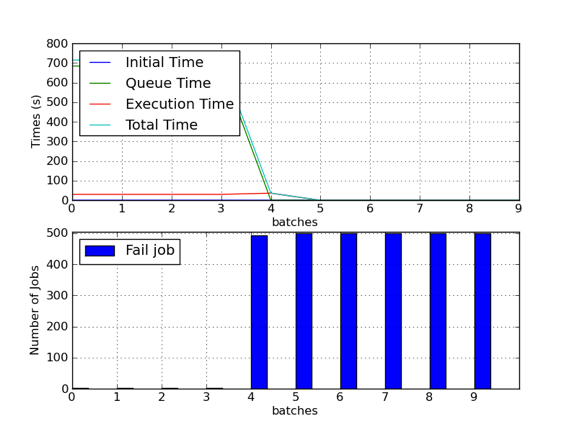

GRAM 5 scalability testing: The TeraGrid Science Gateway projects tend to submit large numbers of jobs to TeraGrid resources, usually through the Globus GRAM interfaces. Because of scalability problems with GRAM, members of the Science Gateway team at Indiana University extracted code from their GridChem and UltraScan gateways and developed a scalability test for GRAM. When GRAM 5 was released, it was deployed to a TACC test node on Ranger and scalability testing began. Because the Ranger test node might be re-allocated, the group created an alternate test environment on FutureGrid in July 2010. A virtual cluster running Torque and GRAM 5 was created on UF’s Foxtrot machine using Nimbus, and the Science Gateway team was given access to it as well. One problem debugged on the virtual cluster was a large number of error messages written to a log file in the user’s home directory. This did not affect job execution, but it consumed space in the user’s home directory and was reported to the Globus developers. The effort is summarized on the TeraGrid Wiki at http://www.teragridforum.org/mediawiki/index.php?title=GRAM5_Scalability_Testing
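The general shape of such a scalability test can be sketched as follows. This is an illustration only, not the Indiana University test code, which is not reproduced here. It assumes a generic harness that pushes many submissions through a thread pool and counts failures. The names `run_load_test`, `LoadResult`, and `fake_submit` are hypothetical, and `fake_submit` is a stand-in that would be replaced by whatever call actually submits a job to the service under test.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed
from dataclasses import dataclass


@dataclass
class LoadResult:
    """Summary of one load-test run (hypothetical structure)."""
    submitted: int
    failed: int
    elapsed_s: float

    @property
    def failure_rate(self) -> float:
        # Fraction of submissions that raised an exception.
        return self.failed / self.submitted if self.submitted else 0.0


def run_load_test(submit, n_jobs: int, concurrency: int) -> LoadResult:
    """Call submit(job_id) n_jobs times, with up to `concurrency` in flight."""
    failed = 0
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        futures = [pool.submit(submit, job_id) for job_id in range(n_jobs)]
        for future in as_completed(futures):
            try:
                future.result()
            except Exception:
                # Any exception from the submission counts as a failed job.
                failed += 1
    return LoadResult(n_jobs, failed, time.perf_counter() - start)


def fake_submit(job_id: int) -> int:
    """Hypothetical stand-in for a real submission; fails every tenth job."""
    if job_id % 10 == 9:
        raise RuntimeError(f"simulated submission failure for job {job_id}")
    return job_id


if __name__ == "__main__":
    result = run_load_test(fake_submit, n_jobs=100, concurrency=8)
    print(f"{result.failed} of {result.submitted} submissions failed "
          f"in {result.elapsed_s:.3f} s")
```

Raising `n_jobs` and `concurrency` step by step, and watching where the failure rate or elapsed time starts to climb, is one simple way to locate the load at which a service begins to degrade.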

GridFTP 5 testing: To test the newest GridFTP 5 release, the TeraGrid QA group again turned to FutureGrid and instantiated a single VM with GridFTP 5 on UCSD’s Sierra and UF’s Foxtrot machines in October 2010. They then verified several of the new features, such as dataset synchronization and the offline mode for the server. No major problems were detected in this testing, though a bug related to the new dataset synchronization feature was reported. The results are summarized on the TeraGrid Wiki at http://www.teragridforum.org/mediawiki/index.php?title=GridFtp5_Testing.

Some results:

FutureGrid is a resource provider for